A neural network architecture is the design blueprint of how artificial neurons are arranged and connected inside an AI model. It defines the structure of the network, including the number of layers, how data flows between them, and the functions that enable it to learn. Its key components are the input layer, the hidden layers, and the output layer. The input layer receives raw data such as images or text. The hidden layers, built from interconnected nodes, transform that data step by step, which is what makes complex learning tasks possible. The output layer generates the model’s predictions or classifications. Think of it as the floor plan of an AI system: without the right layout, the model can’t do its job effectively.

Why should business leaders care? The choice of neural network architecture determines how well AI performs in real-world scenarios, ranging from fraud detection in finance to personalized product recommendations in e-commerce. A good design saves money, improves accuracy, and makes adoption less risky. A poor one? It drains budgets with little impact.

The quiet revolution: from simple layers to transformers

In the last decade, AI has gone from experimental to mainstream. That leap was fueled not just by bigger datasets or faster chips, but by smarter deep neural network architectures. Deep learning networks, which stack many layers, have enabled significant advances in pattern recognition and data processing. Early models, such as feedforward networks, laid the foundation. Convolutional neural networks (CNNs) enabled machines to “see”. Recurrent neural networks (RNNs) and their variants, such as LSTMs, helped them “remember”. Transformer neural networks then reshaped how we handle language, multimodal data, and generative AI.

At the center of this progress sits the attention mechanism — a concept that lets AI focus on what matters most in a sequence of data. Without attention, large language models like GPT or BERT wouldn’t exist, and today’s wave of enterprise AI adoption would look very different.

Why architecture choice matters for enterprises

For companies weighing AI adoption, understanding neural network design is not academic trivia; it’s a business necessity. Each architecture offers strengths and trade-offs. A retailer predicting demand spikes doesn’t need the same setup as a hospital analyzing MRI scans. Choosing the wrong one often means wasted time, higher costs, and failed pilots. Selecting the right machine learning architecture is crucial for ensuring scalability, maintainability, and optimal performance in real-world applications.

Here’s what clarity on architecture brings to the table:

- Strategic fit — Aligns the AI system with specific business goals (prediction vs. generation, images vs. text).

- Cost control — Optimizes infrastructure and training budgets by avoiding overly complex models.

- Scalability — Supports enterprise growth without rebuilding from scratch.

- Risk reduction — Prevents compliance and performance issues tied to misapplied models.

- Innovation speed — Shortens the gap between proof-of-concept and production-level impact.

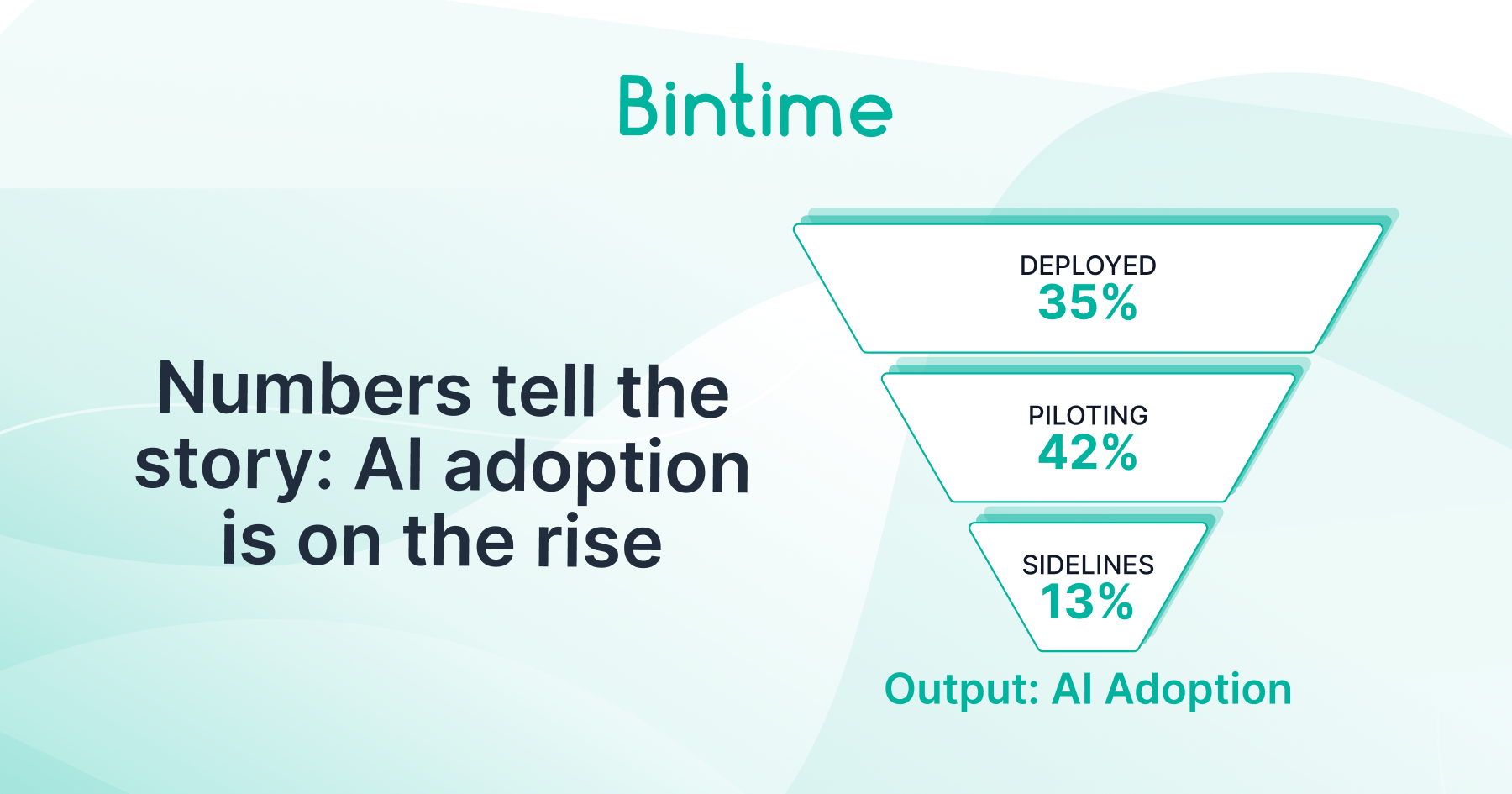

The numbers don’t lie: AI adoption is accelerating

If this feels theoretical, the market data says otherwise:

- 35% of businesses have fully deployed AI in at least one function, while 42% are piloting or experimenting. Only 13% are still on the sidelines.

- The global AI market was valued at $454 billion in 2022 and is projected to grow at a 37.3% CAGR through 2030, with neural networks driving much of this surge.

- The artificial neural network software market is projected to keep expanding at a compound annual growth rate of about 22.4%, fueled by rising enterprise demand for deep learning, generative AI, and predictive analytics in 2025 and beyond.

- The global neural network market itself is rising fast: from $160.8 million in 2021 to a projected $743 million by 2030.

- Machine learning, heavily dependent on neural network structures, is one of the most in-demand AI skillsets. The ML market is forecast to reach $568.32 billion by 2031.

- Adoption is strongest in healthcare, finance, manufacturing, and retail, where neural networks and other machine learning models power predictive tasks such as diagnostics, risk analysis, predictive maintenance, and customer behavior modeling.

- Key drivers include cost reduction, automation needs, labor shortages, and the demand for complex data insights, making AI adoption less optional and more inevitable.

Almost all companies are investing in AI, but just 1% call themselves mature. While 92% of leaders plan to increase spending over the next three years, the biggest barrier isn’t technology or employees, but rather leadership alignment. Employees are already using AI more than leaders realize, but without the right architectural and strategic choices, those pilots stall instead of scale. (McKinsey, Superagency in the Workplace, 2025)

Neural network architecture is the foundation of business AI. It defines how models process data, impacts cost and accuracy, and makes or breaks enterprise adoption. Modern breakthroughs, such as attention mechanisms and transformers, have turned AI from a niche tool into a central part of day-to-day operations.

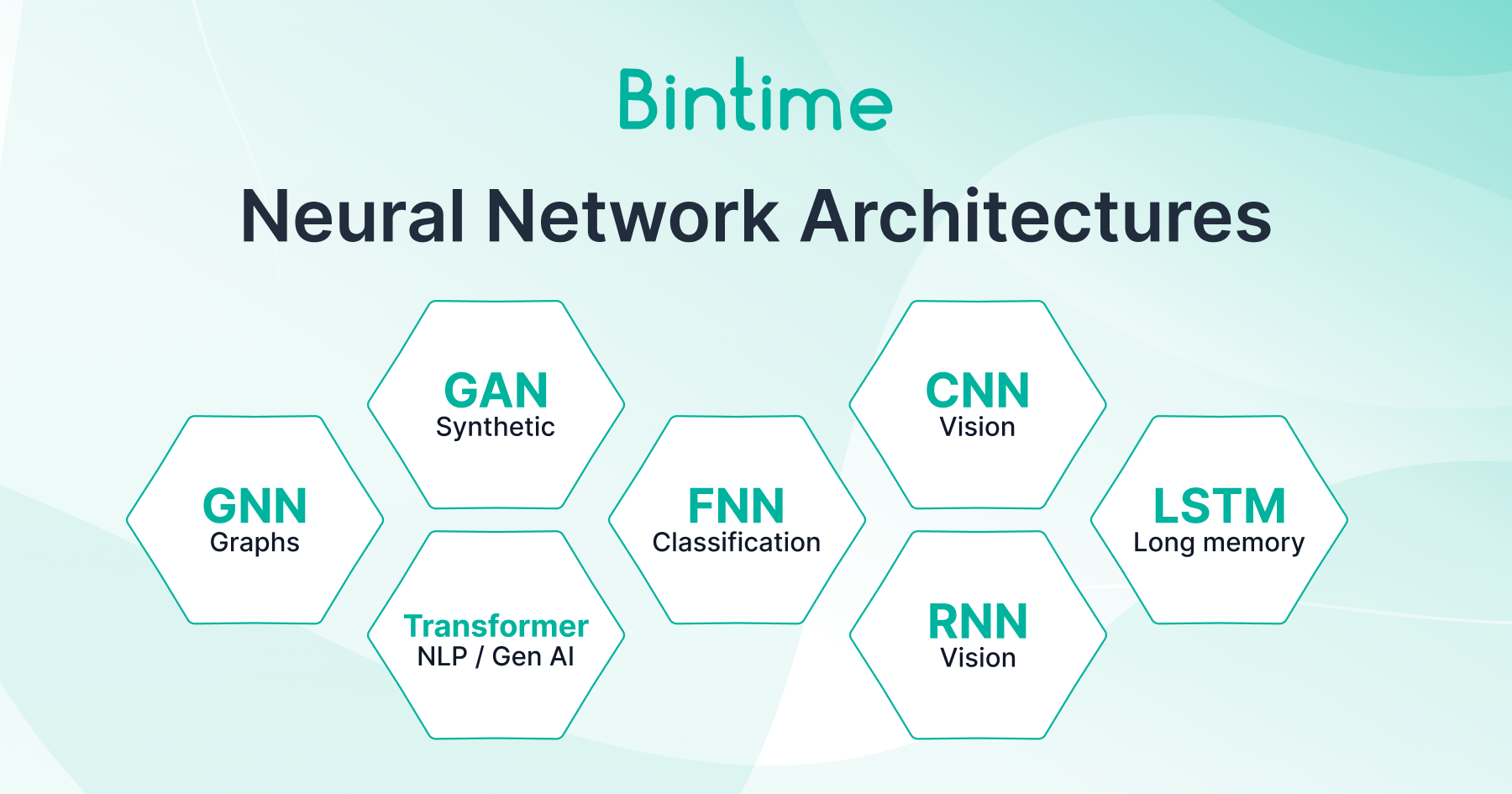

Types of Neural Networks and Their Core Architectures

Neural networks come in many forms, but the most widely used architectures fall into several families: from simple perceptrons to modern transformers. Each has its own structure, learning method, and real-world strengths.

Artificial neural networks are designed to uncover the underlying structure of input data, which makes them useful for tasks such as classification, pattern recognition, and predictive analytics. They typically serve as the model inside a broader machine learning pipeline and can be trained for both supervised and unsupervised learning tasks.

Think of them as tools in a kit: you wouldn’t use a wrench to cut wood. Choosing the right neural network architecture means aligning the model’s structure with the business problem. The choice of architecture also impacts how a machine learning model is integrated and deployed in real-world systems, affecting real-time prediction capabilities and the need for continuous retraining to maintain accuracy.

As one technical deep dive explains, “Neural networks, algorithms designed to simulate the neural connections in the brains of biological entities, have become pivotal in solving various machine learning tasks.”

Read more in Neural Networks: Types, Structure, and Characteristics.

Perceptron & multilayer perceptron (MLP)

- Perceptron: Introduced in 1958 by Frank Rosenblatt, the perceptron is the simplest type of neural network, consisting of a single artificial neuron used for linearly separable tasks. Each artificial neuron computes a weighted sum of its inputs, which is then passed through an activation function to produce the output. During supervised learning, the perceptron is trained to match its predictions to the desired outputs, which serve as targets for learning. It laid the foundation for everything that came after.

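- Multilayer Perceptron (MLP): An extension of the perceptron with one or more hidden layers, typically using non-linear activations such as sigmoid or ReLU. The hidden layers let it solve problems that are not linearly separable.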

Core features: Input nodes → hidden layers → output nodes. Fully connected.

Best for: Non-linear classification and regression problems.

Use cases: Early fraud detection models, structured business forecasting.
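To make the perceptron’s learning rule concrete, here is a minimal pure-Python sketch. It assumes a toy dataset (the logical AND function, which is linearly separable) and the classic error-driven update: whenever a prediction is wrong, each weight moves in the direction that reduces the error, scaled by its input. Names such as `train_perceptron` are illustrative, not from any library.

```python
# Minimal perceptron with the classic error-driven learning rule (pure Python).
# Assumption: a toy dataset, the logical AND function, which is linearly separable.

def step(z):
    """Binary step activation: 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0

def predict(weights, bias, x):
    # Weighted sum of the inputs plus a bias, passed through the activation function
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return step(z)

def train_perceptron(data, epochs=20, lr=1.0):
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                errors += 1
                # Nudge each weight toward the desired output, scaled by its input
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every training example is classified correctly
            break
    return weights, bias

AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the loop stops once every example is classified correctly. On XOR, which is not linearly separable, a single perceptron never converges; that limitation is exactly what the hidden layers of an MLP overcome.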

Feedforward neural networks (FNNs)

A feedforward neural network (FNN) is the most basic multilayer design, and the MLP above is its classic example: data moves only in one direction, from input to output. No loops, no memory: just straight processing.

- Core features: Input → hidden layers → output layer. The output layer is responsible for generating the predicted output of the network.

- Best for: Structured data and simple classification tasks.

- Use cases: Customer churn prediction, credit scoring, or sales forecasting.

- Why it matters for business: Low cost and fast to deploy, making it ideal for early AI experiments.

During the training process, the network adjusts its weights to minimize the difference between the predicted output and the actual target. The network learns from training data, and tuning hyperparameters such as the learning rate is crucial for optimizing the speed and accuracy of convergence.
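The training loop described above can be sketched in pure Python. This is a minimal illustration, not a production recipe: it assumes a one-hidden-layer network (tanh hidden units, linear output), mean squared error as the “difference” being minimized, the XOR problem as toy training data, and a hand-picked learning rate of 0.1. Backpropagation computes the gradients; gradient descent applies them.

```python
import math
import random

# Minimal one-hidden-layer feedforward network trained with full-batch gradient
# descent. Assumptions: XOR as toy data, tanh hidden units, linear output, MSE loss.
random.seed(0)
N_IN, N_HIDDEN = 2, 4
LEARNING_RATE = 0.1  # hyperparameter: size of each weight update

# W1[j][i] connects input i to hidden unit j; W2[j] connects hidden unit j to the output
W1 = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b1 = [0.0] * N_HIDDEN
W2 = [random.uniform(-1, 1) for _ in range(N_HIDDEN)]
b2 = 0.0

DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def forward(x):
    # Data flows one way: input -> hidden (tanh) -> output (linear)
    h = [math.tanh(sum(W1[j][i] * x[i] for i in range(N_IN)) + b1[j]) for j in range(N_HIDDEN)]
    y = sum(W2[j] * h[j] for j in range(N_HIDDEN)) + b2
    return h, y

def mse_loss():
    return sum((forward(x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

initial_loss = mse_loss()
for _ in range(2000):
    # Accumulate gradients of the mean squared error over the whole batch
    gW1 = [[0.0] * N_IN for _ in range(N_HIDDEN)]
    gb1 = [0.0] * N_HIDDEN
    gW2 = [0.0] * N_HIDDEN
    gb2 = 0.0
    for x, t in DATA:
        h, y = forward(x)
        dy = 2 * (y - t) / len(DATA)            # dLoss/dy
        gb2 += dy
        for j in range(N_HIDDEN):
            gW2[j] += dy * h[j]
            dh = dy * W2[j] * (1 - h[j] ** 2)   # backpropagate through tanh
            gb1[j] += dh
            for i in range(N_IN):
                gW1[j][i] += dh * x[i]
    # Gradient descent step: move every weight against its gradient
    for j in range(N_HIDDEN):
        W2[j] -= LEARNING_RATE * gW2[j]
        b1[j] -= LEARNING_RATE * gb1[j]
        for i in range(N_IN):
            W1[j][i] -= LEARNING_RATE * gW1[j][i]
    b2 -= LEARNING_RATE * gb2

final_loss = mse_loss()
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

Raising `LEARNING_RATE` speeds up early progress but can make training unstable; lowering it makes training steadier but slower. That trade-off is what tuning this hyperparameter manages.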

Convolutional neural networks (CNNs)

Convolutional neural networks are designed to recognize spatial patterns. They use convolutional layers with filters to detect edges, textures, and shapes, followed by pooling layers to compress information, and then fully connected layers for classification. CNNs excel at processing data such as images by extracting hierarchical features that improve analysis and model performance.

- Core features: Convolutional + pooling layers for feature extraction; highly efficient for images.

- Best for: Image and video analysis, including image recognition, object detection, and face recognition.

- Use cases: Medical image analysis (for example, tumor detection), product quality inspection, eCommerce visual search.

- Why it matters for business: CNNs dominate computer vision applications across healthcare, manufacturing, and retail.
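The two core CNN operations, convolution and pooling, can be sketched in pure Python. This example assumes a tiny 6×6 grayscale image (dark left half, bright right half) and a hand-picked vertical-edge filter; in a real CNN, filter values are learned during training and many filters run in parallel.

```python
# Minimal sketch of a 2D convolution (valid padding, stride 1) and 2x2 max pooling.
# Like most deep learning libraries, conv2d computes cross-correlation
# (the filter is not flipped). Image and filter values are illustrative assumptions.

def conv2d(image, kernel):
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Slide the filter over the image and sum the element-wise products
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out

def max_pool2d(fmap, size=2):
    # Keep only the strongest response in each non-overlapping size x size window
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]

# 6x6 image: dark left half (0), bright right half (1)
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

features = conv2d(image, vertical_edge)  # 4x4 feature map
pooled = max_pool2d(features)            # 2x2 after pooling
print(features)  # [[0, 3, 3, 0], [0, 3, 3, 0], [0, 3, 3, 0], [0, 3, 3, 0]]
print(pooled)    # [[3, 3], [3, 3]]
```

The feature map responds only where brightness changes, and pooling keeps the strongest responses while halving each spatial dimension.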

Recurrent neural networks (RNNs)

A recurrent neural network (RNN) is designed for sequence modeling. Instead of processing each input independently, it maintains a hidden state that carries context from previous steps, so earlier inputs influence later outputs. The same weights are reused at every time step and are updated from the error accumulated over each training sequence.

- Core features: Feedback loops; trained via backpropagation through time.

- Best for: Sequential and time-series data.

- Use cases: Stock price forecasting, demand planning, customer sentiment analysis.

- Why it matters for business: RNNs make sense of changing data streams, though they struggle with long-term dependencies.
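Here is a minimal sketch of the hidden-state idea, using a scalar RNN cell in pure Python. The weights `W_XH` and `W_HH` are hypothetical, hand-picked values (a real RNN uses learned weight matrices over vector inputs), but the recurrence is the same: each new state is computed from the current input and the previous state.

```python
import math

# Minimal scalar (Elman-style) RNN unrolled over a sequence.
# W_XH and W_HH are hypothetical values, not trained parameters.
W_XH = 0.5   # input -> hidden weight
W_HH = 0.8   # hidden -> hidden (recurrent) weight
B_H = 0.0

def rnn_forward(sequence, h0=0.0):
    h = h0
    states = []
    for x_t in sequence:
        # The new state depends on the current input AND the previous state
        h = math.tanh(W_XH * x_t + W_HH * h + B_H)
        states.append(h)
    return states

# Two sequences that end the same way but have a different history
print(rnn_forward([1.0, 0.0, 0.0]))
print(rnn_forward([0.0, 0.0, 0.0]))
```

The final states differ only because of the first input, which is the “memory” at work. Notice, though, that the first input’s influence shrinks at every step; over long sequences it fades almost entirely, a small-scale view of why plain RNNs struggle with long-term dependencies.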

LSTM Networks

Long Short-Term Memory (LSTM) networks extend RNNs by adding gated cell states that decide what information to keep or forget.

- Best for: Speech recognition, predictive maintenance, time-series forecasting.

- Why it matters for business: LSTMs made sequence-based AI commercially viable before transformers took over language tasks.
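The gating idea can be sketched with a single LSTM unit. Assumptions: scalar inputs, hand-picked (untrained) weights in the hypothetical `PARAMS` table, and a forget-gate bias set high so the cell keeps its memory. After one input signal followed by 20 empty steps, the cell state still holds most of what was written, because a forget gate close to 1 lets information pass through almost unchanged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Minimal scalar LSTM cell (one unit). All weights are hypothetical, hand-picked values.
# Each entry is (w_x, w_h, bias) applied to the input x and previous hidden state h.
PARAMS = {
    "forget": (0.0, 0.0, 4.0),   # large bias -> forget gate near 1 -> keep old cell state
    "input":  (4.0, 0.0, -2.0),  # opens when the input is large
    "cand":   (1.0, 0.0, 0.0),   # candidate value to write into the cell
    "output": (0.0, 0.0, 2.0),
}

def gate(name, x, h, act):
    w_x, w_h, b = PARAMS[name]
    return act(w_x * x + w_h * h + b)

def lstm_step(x, h_prev, c_prev):
    f = gate("forget", x, h_prev, sigmoid)        # fraction of the old cell state to keep
    i = gate("input", x, h_prev, sigmoid)         # how much new information to write
    c_tilde = gate("cand", x, h_prev, math.tanh)  # the new information itself
    o = gate("output", x, h_prev, sigmoid)        # how much of the cell to expose
    c = f * c_prev + i * c_tilde                  # updated cell state
    h = o * math.tanh(c)                          # new hidden state
    return h, c

h, c = 0.0, 0.0
for x in [1.0] + [0.0] * 20:  # one signal followed by 20 empty steps
    h, c = lstm_step(x, h, c)
print(round(c, 3))
```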

Transformers

Transformers use attention mechanisms to weigh the importance of data points. Unlike RNNs, they process sequences in parallel, making them faster and more scalable.

- Core features: Self-attention, multi-head attention, encoder and/or decoder stacks.

- Best for: Natural language processing, generative AI, multimodal data.

- Use cases:
  - OpenAI GPT-4 generating text/code.
  - Google Assistant combining RNNs + transformers for conversational AI.
  - Enterprise AI assistants (customer service, translation, fraud detection).

Other architectures emerging in 2025

While CNNs, RNNs, and transformers dominate, businesses are experimenting with other neural network architecture types:

- Generative Adversarial Networks (GANs): Two networks (generator + discriminator) compete, producing realistic synthetic data.
  - Use cases: Synthetic product photos, fraud detection, data augmentation.

- Variational Autoencoders (VAEs): Use an encoder-decoder architecture to map data into a probabilistic latent space and generate new samples that preserve the statistical properties of the original data. This makes them valuable for synthetic data in market research and for data privacy.

- Radial Basis Function (RBF) Networks: Use distance-based activation functions for classification and interpolation.
  - Use cases: Real-time fraud detection, control systems.

- Deep Belief Networks (DBNs): Stacked Restricted Boltzmann Machines, useful for feature extraction.
  - Use cases: Dimensionality reduction, early vision tasks.

- Graph Neural Networks (GNNs): Designed for graph-structured data with message-passing mechanisms.
  - Use cases: Social network analysis, supply chain optimization, drug discovery.

These newer types reflect how neural network structures evolve with data complexity and enterprise demand.
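To show what message passing means in practice, here is a minimal sketch of one GNN layer on a tiny, hypothetical supply-chain graph. Each node averages its neighbors’ features and mixes the result with its own. The fixed mixing weights `SELF_WEIGHT` and `NEIGHBOR_WEIGHT` are assumptions for readability; a real GNN learns weight matrices and applies a non-linear activation.

```python
# Minimal sketch of message passing in a graph neural network (pure Python).
# The graph, the "disruption risk" feature, and the mixing weights are hypothetical.
SELF_WEIGHT, NEIGHBOR_WEIGHT = 0.5, 0.5

# Undirected graph as an adjacency list: node -> neighbors
graph = {
    "supplier_a": ["factory"],
    "supplier_b": ["factory"],
    "factory": ["supplier_a", "supplier_b", "warehouse"],
    "warehouse": ["factory"],
}
# One feature per node: a hypothetical disruption-risk score
features = {"supplier_a": [1.0], "supplier_b": [0.0], "factory": [0.0], "warehouse": [0.0]}

def message_passing_step(graph, features):
    updated = {}
    for node, neighbors in graph.items():
        dim = len(features[node])
        # Aggregate: mean of the neighbors' feature vectors (the "messages")
        agg = [sum(features[n][k] for n in neighbors) / len(neighbors) for k in range(dim)]
        # Update: mix the node's own state with the aggregated messages
        updated[node] = [SELF_WEIGHT * features[node][k] + NEIGHBOR_WEIGHT * agg[k]
                         for k in range(dim)]
    return updated

h1 = message_passing_step(graph, features)
h2 = message_passing_step(graph, h1)
print(h1)
print(h2)
```

After one round, only the factory picks up supplier A’s risk score; after a second round it reaches the warehouse, two hops away. Stacking layers is how GNNs propagate information across a network of relationships.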

Real-life applications of neural networks (2025 snapshot)

- Autonomous transportation: Tesla Autopilot uses CNNs for perception. Evaluating such models on unseen data is crucial to ensure reliability in real-world driving scenarios.

- Medical diagnostics: Aidoc uses CNNs to flag anomalies in CT scans. Testing the system on unseen data helps validate its accuracy and robustness in clinical settings.

- Education: DreamBox applies neural nets to adapt math lessons.

- Fraud detection: PayPal relies on anomaly-detection neural networks.

- Agriculture: John Deere applies CNNs + GNNs for crop optimization.

- Robotics: Boston Dynamics’ Spot navigates via neural network–based controllers that must operate safely and reliably in dynamic environments.

- Language AI: OpenAI GPT-4 (transformer) powers creative tasks, summarization, and code generation.

- Generative AI: GANs are used for image generation, such as creating images from text descriptions, image-to-image translation, and producing realistic visuals for various applications.

Quick comparison: neural network architectures

| Architecture | Key Features | Best For | Example Use Case |
|---|---|---|---|
| Feedforward Neural Network (FNN) | Simple, one-way flow | Structured data, classification | Predicting customer churn |
| Convolutional Neural Network (CNN) | Convolutions + pooling | Image/video recognition | Detecting defects in products |
| Recurrent Neural Network (RNN) | Hidden state, sequential memory | Time-series & sequential data | Demand forecasting |
| Long Short-Term Memory (LSTM) | Gated cell states | Long-sequence learning | Speech recognition |
| Transformer Neural Network | Attention-based, parallelizable | NLP, generative AI, multimodal | AI shopping assistant |
| Generative Adversarial Network (GAN) | Generator + discriminator | Data synthesis | Creating synthetic product images |
| Graph Neural Network (GNN) | Message passing on graphs | Relational/graph data | Supply chain optimization |

Why neural network architecture matters for adoption

The architecture defines not only how a model learns, but also whether it can scale in business:

- Learning capacity: Determines the complexity of the patterns a model can capture.

- Computational efficiency: Impacts training costs and scalability.

- Robustness: Well-structured models tolerate noise and adapt to change.

- Domain-specific fit: CNNs for vision, RNNs/LSTMs for sequences, transformers for multimodal AI.

- Transparency and interpretability: Essential for compliance-heavy industries.

A well-chosen architecture increases trust and usefulness, which accelerates adoption across sectors. Poorly matched architectures waste resources and stall enterprise AI maturity.

The core types of neural networks in 2025 are FNNs, CNNs, RNNs/LSTMs, and transformers; however, emerging models like GANs and GNNs are expanding the use cases. The architecture choice matters because it directly impacts performance, cost, and trust, thereby influencing how quickly enterprises adopt AI.

Attention Mechanisms — Why They Matter Now

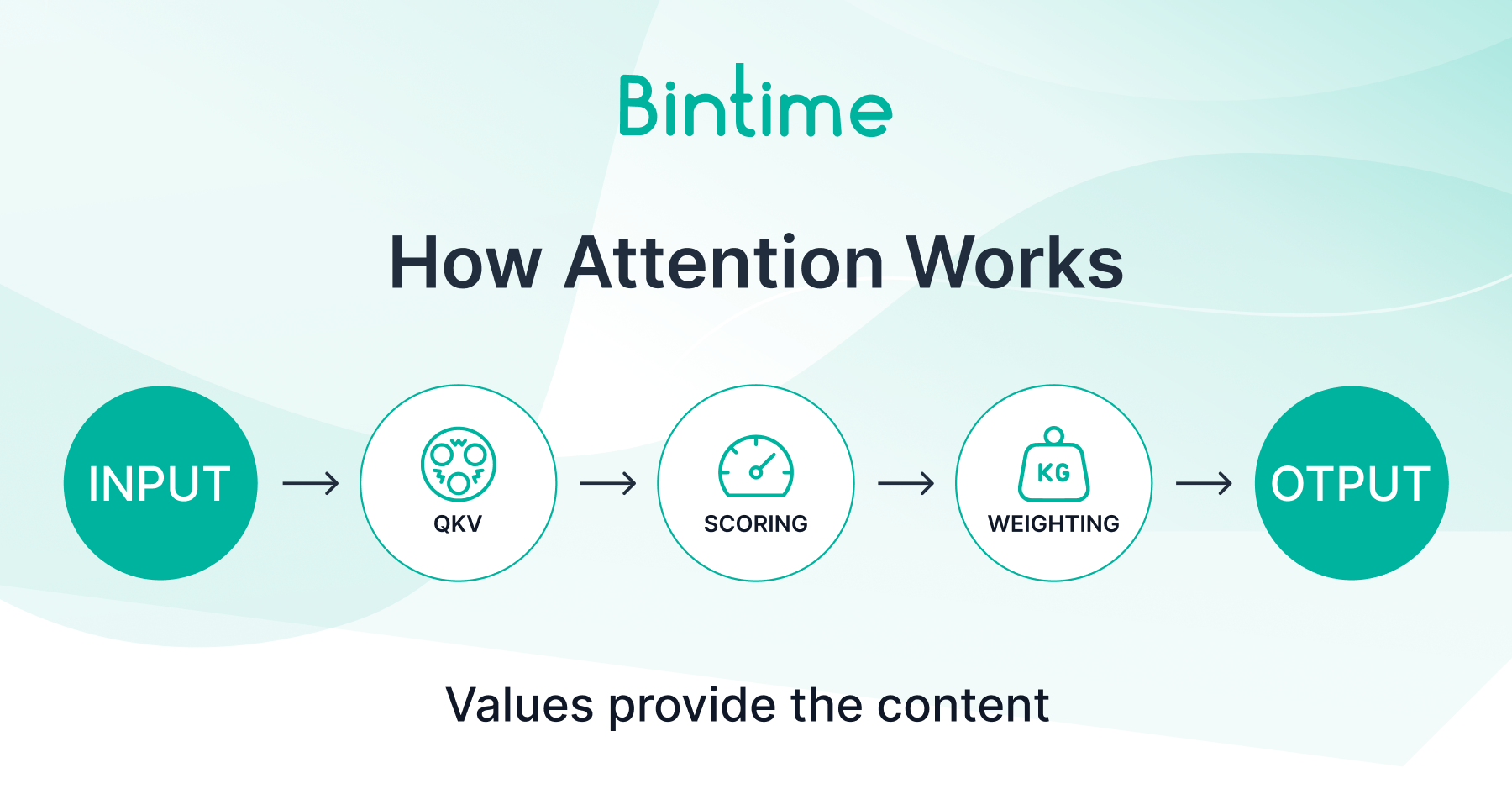

Attention is a method that lets a neural network decide which parts of the input data deserve more focus when making predictions. Instead of treating every input equally, the model learns to assign different weights of importance, much like how humans pay attention to keywords in a sentence or to certain details in an image.

This seemingly small tweak reshaped deep learning, powering transformer neural networks, large language models, and hybrid systems that outperform older CNNs and RNNs on complex tasks.

Why attention matters

Traditional neural networks struggle when inputs are long or complex:

- CNNs are great at images, but don’t capture context beyond local features.

- RNNs can model sequences, but their performance weakens as sequences grow longer, because information from early steps fades during training (the vanishing gradient problem).

Attention solved this bottleneck by allowing models to examine all inputs simultaneously and determine which ones are most important. That’s why transformers scaled from translation tasks to generative AI.

Core types of attention in 2025

While “attention” may sound abstract, in practice it comes in several distinct forms, each addressing a different challenge. In 2025, three variations dominate modern AI systems: self-attention, which captures relationships within a single sequence; multi-head attention, which examines the input from several perspectives at once; and cross-attention, which links different data modalities such as text and images.

Self-attention

Each element in the input (for example, a word in a sentence) examines all other elements in the same sequence and decides which ones are most relevant.

- Example: In the phrase “The stock price fell after the earnings report,” the model can learn to link “fell” both to its subject, “stock price,” and to its cause, “earnings report,” even though the words sit apart in the sentence.

- Business value: Self-attention improves tools like chatbots and enterprise search by letting every word draw on the entire context window, not just the last sentence.

Multi-head attention

Instead of relying on a single perspective, the network runs multiple attention “heads” in parallel. Each head learns to look at the input differently: one might track word position, another meaning, another the relationships between words.

- Example: A customer review like “The delivery was slow, but the product quality was excellent” gets broken down so one head highlights “slow delivery” while another captures “excellent quality.”

- Business value: Companies can analyze mixed feedback without losing nuance, which is critical for customer service automation and sentiment analysis.
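Multi-head attention can be sketched by running several small attention computations side by side and concatenating their outputs. In this pure-Python sketch, the two heads, the model width of 4, the token embeddings, and the randomly initialized projection matrices are assumptions for illustration; a real transformer learns these weights and wraps the block in residual connections and layer normalization.

```python
import math
import random

# Minimal multi-head self-attention sketch. Projection weights are random and
# untrained; values and sizes are hypothetical.
random.seed(42)

def matmul(A, B):
    # (n x m) @ (m x p) -> (n x p); zip(*B) iterates over the columns of B
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_T)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

D_MODEL, N_HEADS = 4, 2
D_HEAD = D_MODEL // N_HEADS

# Each head has its own W_Q, W_K, W_V; W_O mixes the concatenated head outputs
heads = [[rand_matrix(D_MODEL, D_HEAD) for _ in range(3)] for _ in range(N_HEADS)]
W_O = rand_matrix(N_HEADS * D_HEAD, D_MODEL)

def multi_head_attention(X):
    head_outputs = []
    for W_Q, W_K, W_V in heads:
        # Each head projects the same input into its own query/key/value space
        head_outputs.append(attention(matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)))
    # Concatenate the heads token by token, then apply the output projection
    concat = [sum((out[t] for out in head_outputs), []) for t in range(len(X))]
    return matmul(concat, W_O)

# Three hypothetical token embeddings, e.g. "delivery", "slow", "quality"
X = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0],
     [1.0, 1.0, 0.0, 0.0]]
out = multi_head_attention(X)
print(len(out), len(out[0]))  # 3 4
```

Each head works in a smaller space (width 2 here), so running two heads costs roughly the same as one full-width head while giving the model two independent views of the sequence.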

Cross-attention

This links two different types of data, such as text and images. The model aligns features across modalities to make richer predictions.

- Example: Matching a product description (“leather laptop backpack”) to its product photo.

- Business value: Cross-attention underpins multimodal search in eCommerce and media, where customers can upload a picture and instantly find the closest product match.
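Cross-attention differs from self-attention in one detail: the queries come from one modality while the keys and values come from another. This sketch assumes hypothetical, hand-picked 2-D embeddings for two text tokens and three image patches, and treats the learned query/key/value projections of a real model as identity to keep it small.

```python
import math

# Minimal cross-attention sketch: text tokens (queries) attend over image patches
# (keys and values). All embeddings are hypothetical, hand-picked values.

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Scaled dot-product score of this text query against every image key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        all_weights.append(w)
        # Each text token receives a weighted mix of the image-patch values
        outputs.append([sum(wj * v[d] for wj, v in zip(w, values))
                        for d in range(len(values[0]))])
    return outputs, all_weights

text_tokens = {"leather": [2.0, 0.0], "backpack": [0.0, 2.0]}
image_patches = [[2.0, 0.0],   # patch showing leather texture
                 [0.0, 2.0],   # patch showing the backpack shape
                 [0.0, 0.0]]   # background patch

_, weights = cross_attention(list(text_tokens.values()), image_patches, image_patches)
for token, w in zip(text_tokens, weights):
    print(token, [round(x, 2) for x in w])
```

Here “leather” places most of its weight on the leather-texture patch and “backpack” on the backpack-shape patch: the kind of alignment that lets a text query retrieve a matching product photo.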

How attention works (step-by-step)

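- Input encoding: Each word or image patch is represented as a vector (an embedding).

- Query, Key, Value generation: The model multiplies each input vector by three learned weight matrices to produce a Query, a Key, and a Value vector.

- Similarity scoring: Each Query is compared with every Key (a dot product, scaled by the square root of the key dimension) to calculate how much focus each input deserves.

- Weighting Values: A softmax turns these scores into weights that sum to 1, so the most relevant inputs get the highest weights.

- Output aggregation: The Values are summed using those weights, producing context-aware representations.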

In short: Queries ask, Keys answer, Values provide the content.
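The five steps above can be sketched as scaled dot-product self-attention, the formulation used in transformers. This is a minimal pure-Python illustration under stated assumptions: the token vectors and the projection matrices `W_Q`, `W_K`, and `W_V` are hypothetical, hand-picked values, whereas a trained model learns them.

```python
import math

# Minimal scaled dot-product self-attention, following the five steps above.
# Token vectors and projection matrices are hypothetical values.

def matmul(A, B):
    # (n x m) @ (m x p) -> (n x p)
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X, W_Q, W_K, W_V):
    # Step 2: project every input vector into a query, a key, and a value
    Q, K, V = matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)
    d_k = len(K[0])
    # Step 3: score each query against every key (scaled dot product)
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
              for q_row in Q]
    # Step 4: softmax turns each row of scores into weights that sum to 1
    weights = [softmax(row) for row in scores]
    # Step 5: each output is the weighted sum of all value vectors
    return matmul(weights, V), weights

# Step 1: input encoding, three tokens as 3-D vectors
X = [[1.0, 0.0, 1.0],
     [0.0, 2.0, 0.0],
     [1.0, 1.0, 0.0]]
W_Q = [[1, 0], [0, 1], [1, 0]]
W_K = [[1, 0], [0, 1], [0, 1]]
W_V = [[1, 0], [0, 1], [1, 1]]

output, weights = self_attention(X, W_Q, W_K, W_V)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token attends to every token in the sequence, including itself. Dividing by the square root of the key dimension keeps scores from growing too large as vectors get longer, which would otherwise push the softmax toward extreme, near one-hot weights.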

Practical business examples

- Customer support: Self-attention models can scan whole chat histories, not just the last line, enabling AI to resolve cases faster and with fewer errors.

- Product recommendations: Cross-attention improves personalization by linking text (“running shoes”) to visual product catalogs.

- Supply chain forecasting: Multi-head attention highlights which suppliers, regions, or timeframes influence demand the most.

- Fraud detection: Hybrid models that combine attention with graph-based networks scan transaction graphs, focusing on unusual behavior rather than brute-force checking.

Attention inside CNNs and hybrid models

Attention isn’t limited to transformers. In 2025, many convolutional neural network architectures integrate attention blocks (“attention CNNs”) to improve feature detection in medical imaging and industrial inspection. Hybrids, CNN backbones with attention layers, are now standard in vision tasks where context matters as much as detail.
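As one illustration (our choice of technique, not necessarily what any particular product uses), here is channel attention in the style of squeeze-and-excitation (SE) blocks, a well-known way to add attention to a CNN. The sketch uses hypothetical, untrained weights `W1` and `W2` and a 2-channel feature map.

```python
import math

# Minimal squeeze-and-excitation-style channel attention (pure Python).
# Feature maps and weights are hypothetical, hand-picked values.

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def se_block(feature_maps, W1, W2):
    # Squeeze: summarize each channel by its global average
    squeezed = [sum(sum(r) for r in fm) / (len(fm) * len(fm[0])) for fm in feature_maps]
    # Excitation: small bottleneck layer (ReLU), then one sigmoid score per channel
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in W1]
    scales = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in W2]
    # Reweight: multiply every value in each channel by that channel's score
    reweighted = [[[v * s for v in r] for r in fm] for fm, s in zip(feature_maps, scales)]
    return reweighted, scales

feature_maps = [
    [[4.0, 4.0], [4.0, 4.0]],  # channel 0: strong response (e.g. a defect edge)
    [[0.5, 0.5], [0.5, 0.5]],  # channel 1: weak response (background texture)
]
W1 = [[1.0, 1.0]]     # 2 channels -> 1 hidden unit (the bottleneck)
W2 = [[1.0], [-1.0]]  # 1 hidden unit -> 2 channel scores

reweighted, scales = se_block(feature_maps, W1, W2)
print([round(s, 3) for s in scales])
```

The block learns (here, is hand-set) to amplify the informative channel and suppress the background one, which is how attention helps CNN backbones focus on the features that matter.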

Business crossover: Attention in eCommerce

The same mechanisms powering LLMs also drive smarter product discovery, personalized recommendations, and context-aware search in online retail. As one case study puts it, “If you’ve ever seen Netflix predict your weekend binge or Amazon somehow ‘know’ you need new running shoes, there’s a good chance an attention mechanism was quietly working behind the scenes.”

For a full breakdown of use cases, see Attention Mechanism in eCommerce | AI for Personalization, where we explore how attention reshapes recommendations, ranking, customer behavior analysis, and AI-driven chatbots in retail.

The attention mechanism in deep learning enables models to focus on the most relevant parts of the data, dramatically improving performance. Self-attention powers transformers, multi-head attention boosts context, and cross-attention connects text to images or audio. From AI chatbots to visual search in eCommerce, attention is the secret sauce behind most breakthrough applications.

Neural Network Architecture Design & Activation Functions

What is neural network architecture design?

Neural network architecture design is the process of structuring layers and connections to balance learning capacity with efficiency, while activation functions provide the non-linearity that allows networks to capture complex patterns. Together, they determine how well a model learns from data, how stable training is, and how effectively AI can scale across real-world business tasks. Turning a design into a system that performs reliably in production also takes solid software engineering expertise for building, testing, and deploying it.

Designing modern architectures

In 2025, neural architectures are rarely simple. They often combine multiple layer types:

- Convolutional layers for visual data,

- Transformer layers for sequences,

- Fully connected layers for decision-making.

The choice of structure directly impacts:

- Learning capacity (the complexity of the patterns the model can capture),

- Training cost (how much compute is required),

- Interpretability (whether outputs can be explained).

Key 2025 trends in architecture design:

- The market for enterprise architecture tools is valued at $1.34 billion in 2025, up from $1.29 billion in 2024, reflecting a CAGR of 3.7%. Looking ahead, it’s expected to grow to $1.8 billion by 2029 at a CAGR of 7.6%.

- Hardware-optimized models: Architectures are being tuned for GPUs, TPUs, and even early-stage quantum processors to maximize throughput.

- Model compression: Pruning and quantization techniques shrink models without major accuracy loss, making them deployable on mobile or edge devices.

- Hybrid networks: Increasingly common in healthcare and eCommerce, for example, CNN backbones combined with transformers for medical imaging diagnostics.

Architecture design is not just about performance benchmarks. It’s about making sure a network is accurate, efficient, and deployable at scale.
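Model compression is one of the trends listed above. Here is a minimal sketch of two common techniques, magnitude pruning and symmetric 8-bit post-training quantization, applied to a hypothetical list of weights from one layer. Production toolchains (for example, in PyTorch or TensorFlow) add calibration data, per-channel scales, and often fine-tuning; none of that is shown here.

```python
# Minimal sketches of magnitude pruning and symmetric int8 quantization.
# The weight values are hypothetical.

def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in smallest_first[:k]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [v * scale for v in q]

weights = [0.82, -0.03, 0.41, -0.77, 0.005, 0.26, -0.12, 0.64]
pruned = magnitude_prune(weights, sparsity=0.25)  # drops the 2 smallest weights
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(pruned)
print(q, round(scale, 5), round(max_error, 5))
```

Each weight now fits in one byte instead of four (for 32-bit floats), and the rounding error per weight is at most half the scale factor, which is why accuracy often drops only slightly.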

The role of activation functions

What are activation functions in neural networks?

Activation functions are mathematical operations that introduce non-linearity into a network, allowing it to learn complex patterns instead of just straight-line relationships.

Without them, a deep network, no matter how many layers, would behave like a simple linear model.

Why they matter:

- Control gradient flow during backpropagation.

- Influence convergence speed and stability.

- Decide how signals pass through the network, shaping what patterns are learned.
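The claim that a deep network without activations behaves like a simple linear model can be checked in a few lines. In this sketch (hypothetical 2×2 weights, no biases), two stacked linear layers give exactly the same output as a single layer whose weight matrix is their product; inserting a ReLU between them breaks that equivalence.

```python
# Two linear layers with no activation collapse into one linear layer.
# Weight values are hypothetical.

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def relu(v):
    return [max(0.0, vi) for vi in v]

W1 = [[1.0, -2.0], [0.5, 3.0]]   # layer 1
W2 = [[2.0, 1.0], [-1.0, 0.5]]   # layer 2
x = [1.0, -1.0]

two_linear_layers = matvec(W2, matvec(W1, x))
one_equivalent_layer = matvec(matmul(W2, W1), x)  # W2 @ W1 is just another matrix
print(two_linear_layers, one_equivalent_layer)    # [3.5, -4.25] [3.5, -4.25]

# With a ReLU between the layers, no single matrix reproduces the mapping in general
with_relu = matvec(W2, relu(matvec(W1, x)))
print(with_relu)                                  # [6.0, -3.0]
```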

Popular activation functions in 2025

- ReLU (Rectified Linear Unit): The workhorse of deep learning. Outputs zero for negatives, input value for positives. Fast, stable, and dominant across architectures.

- Leaky ReLU / Parametric ReLU: Fix the “dying ReLU” problem by giving negative inputs a small, non-zero slope, so gradients keep flowing.

- ELU (Exponential Linear Unit): Improves smoothness and convergence in some tasks.

- Sigmoid & Tanh: Historically popular in classification and recurrent models, but limited by vanishing gradients. Still used in output layers.

- Binary Step & Linear: Rare today, except in very simple or teaching models.

- Experimental/custom functions: Research into niche activations like the Seagull function shows potential gains on task-specific datasets, though adoption is limited.

Comparison: Activation functions

| Function | Formula (simplified) | Best For | Pros | Cons |
|---|---|---|---|---|
| ReLU | f(x) = max(0, x) | Most deep nets | Fast, mitigates vanishing gradients | Dead neurons possible |
| Leaky ReLU | f(x) = x if x > 0 else αx | CNNs, RNNs | Fixes dead neurons | Slightly slower training |
| Parametric ReLU (PReLU) | Same as Leaky ReLU, but α is learnable | Flexible CNNs | Learns slope for negatives | Extra parameters |
| ELU | f(x) = x if x > 0 else α(e^x - 1) | CNNs, hybrid models | Smooth gradients, faster convergence | Higher compute cost |
| Sigmoid | f(x) = 1 / (1 + e^-x) | Binary classification | Probabilistic output | Vanishing gradient |
| Tanh | f(x) = (e^x - e^-x) / (e^x + e^-x) | RNNs, sequence tasks | Strong gradient around 0 | Vanishing gradient at extremes |
| Linear | f(x) = ax | Output layers | Simplicity | No non-linearity |
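The functions in the table translate directly into code. This pure-Python sketch assumes common default values for α (0.01 for Leaky ReLU, 1.0 for ELU); PReLU uses the Leaky ReLU formula but learns α during training, so it is not shown separately.

```python
import math

# Activation functions from the table. Default alpha values are assumed conventions.

def relu(x):
    return max(0.0, x)

def leaky_relu(x, alpha=0.01):
    return x if x > 0 else alpha * x

def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    return math.tanh(x)  # equals (e^x - e^-x) / (e^x + e^-x)

def linear(x, a=1.0):
    return a * x

for name, fn in [("relu", relu), ("leaky_relu", leaky_relu), ("elu", elu),
                 ("sigmoid", sigmoid), ("tanh", tanh), ("linear", linear)]:
    print(f"{name:>10}: " + "  ".join(f"{fn(x):+.3f}" for x in (-2.0, 0.0, 2.0)))
```

Comparing the outputs at -2 makes the trade-offs visible: ReLU discards negative inputs entirely, while Leaky ReLU and ELU let a small signal through.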

- Neural network architecture design in 2025 is shaped by automation (neural architecture search, or NAS), hardware optimization, and hybrid models, making AI faster to build and easier to deploy.

- Activation functions are the “switches” that let networks learn complex patterns. ReLU and its variants dominate, but task-specific choices still matter for speed and accuracy.

Real-World AI Applications Powered by Modern Architectures

What are real-world AI applications of neural network architectures?

Modern neural network architectures, from CNNs to transformers, power applications across healthcare, finance, retail, and manufacturing. Each architecture is suited to different data types, making them critical for industry-specific AI adoption.

Healthcare precision and diagnostics

- CNNs in medical imaging: Tools like Aidoc analyze CT scans to flag strokes or pulmonary embolisms in minutes. CNN-based models work far faster than human radiologists and, on specific diagnostic tasks, can match their accuracy.

- Transformers in drug discovery: Language models adapted to protein sequences accelerate drug development by predicting molecular interactions.

- Market impact: AI in healthcare imaging alone is projected to exceed $10 billion by 2030.

For hospitals, the takeaway is clear: architecture choice directly affects diagnostic speed and patient outcomes.

Finance forecasting and fraud prevention

- RNNs/LSTMs in forecasting: Used by banks for risk modeling and stock movement prediction.

- GANs for anomaly detection: PayPal uses adversarial networks to spot irregular patterns in transactions, preventing fraud.

- Transformers for document analysis: Automating compliance by parsing lengthy regulatory texts.

Financial services depend on architectures that balance accuracy with explainability to meet strict compliance standards.

Retail and eCommerce: personalization and search

- CNNs in visual search: Customers can upload a photo (“find shoes like these”) and get instant matches.

- Transformers in recommendations: Self-attention models personalize product feeds based on browsing and purchase history.

- Cross-attention in multimodal AI: Linking text queries with product images or videos to improve discovery.

Example: An often-cited estimate attributes 35% of Amazon’s revenue to its recommendation systems, and modern recommenders of this kind increasingly rely on transformer architectures.

Manufacturing & Industry 4.0: quality and optimization

- CNNs for defect detection: AI systems scan parts on assembly lines, catching micro-defects invisible to the human eye.

- GNNs for supply chain optimization: Map supplier relationships to predict disruptions.

- Hybrid models (CNN + transformers): Used in predictive maintenance to monitor IoT sensor data while interpreting contextual logs.

Manufacturers adopt architectures that integrate with IoT and edge devices to reduce downtime and scrap rates.

Education & Workforce Training

- Adaptive learning with RNNs and transformers: Platforms like DreamBox adjust math exercises in real time, based on student performance.

- Generative AI tutors: Transformer models act as personalized coaches across subjects, available on demand.

This is one of the fastest-growing consumer-facing applications of neural networks, with AI in education expected to surpass $20 billion by 2030.

Snapshot: AI Architectures by Sector

| Industry | Architecture in Use | Applications |
|---|---|---|
| Healthcare | CNNs, Transformers | Medical imaging, drug discovery |
| Finance | RNNs, LSTMs, GANs, Transformers | Risk forecasting, fraud detection, compliance automation |
| Retail & eCommerce | CNNs, Transformers, Cross-Attention | Visual search, product recommendations, personalization |
| Manufacturing | CNNs, GNNs, Hybrid Models | Quality control, supply chain mapping, predictive maintenance |
| Education | RNNs, Transformers | Adaptive learning, AI tutors |

Neural networks aren’t just academic models; they already shape healthcare diagnoses, fraud prevention, eCommerce personalization, industrial quality checks, and adaptive learning. Each architecture shines in its own niche: CNNs for vision, RNNs for sequences, transformers for language, GANs for data generation, and GNNs for relational problems.

Costs and Considerations of AI Implementation

What is the cost of AI implementation in 2025?

The financial side of AI is only one piece of the puzzle; project longevity, trust, and governance are equally critical. According to Gartner (AI Maturity Matters, 2025), 45% of organizations with high AI maturity keep their AI projects running for three years or more, compared with just 20% of low-maturity firms. High-maturity leaders also report stronger trust from business units (57% vs. 14% in low-maturity firms) and a greater focus on robust governance and engineering practices.

The survey highlights that:

- Data quality and availability remain the top challenge, cited by 29–34% of organizations.

- Security threats are flagged by nearly half (48%) of high-maturity leaders.

- Dedicated leadership is rising: 91% of high-maturity organizations already have appointed AI leaders, tasked with fostering innovation, building infrastructure, and aligning teams.

- Metrics matter: 63% of these organizations measure ROI, customer impact, and risks, ensuring AI projects sustain value over time.

The takeaway: getting AI costs “right” isn’t just about hardware or model training budgets. Long-term success depends on governance, metrics, and trust, which extend the lifespan and impact of AI initiatives.

Why companies struggle with cost planning

According to McKinsey (Superagency in the Workplace, 2025), nearly all companies are investing in AI, but only 1% describe themselves as mature. While 92% of leaders plan to increase AI spend in the next three years, the biggest barrier isn’t technology or employee readiness — it’s leadership alignment and underestimation of long-term costs. Employees are already using AI more than leaders realize, but without clear budgeting frameworks, many pilots stall instead of scaling.

Breaking down AI costs in 2025

| Cost Area | Typical Range | Notes |
|---|---|---|
| Model development & training | $50,000 – $500,000+ | Custom deep neural networks; GPT-scale models exceed $10M |
| Fine-tuning pre-trained models | $10,000 – $100,000 | Popular for adapting LLMs/transformers to niche business tasks |
| Data labeling & annotation | $10,000 – $250,000+ | Major hidden cost; varies with dataset size and complexity |
| Infrastructure (cloud) | $5,000 – $100,000 / year | AWS, Azure, Google Cloud; pay-as-you-go scalability |
| Infrastructure (on-premise) | $50,000 – $1M+ | AI servers and GPUs; high upfront cost, lower long-term spend |
| Enterprise-scale implementation | $500,000 – several million | Includes integration, compliance, governance |
| Talent & expertise | Salaries rising 20–30% YoY | Scarcity of ML engineers and data scientists inflates costs |

Training frontier models (e.g., GPT-4, Gemini-class) can exceed $10 million in compute costs alone.
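To see how the line items in the table combine into one budget, here is a minimal Python sketch that sums the low and high ends for a hypothetical project. The project mix (fine-tuning, data labeling, two years of cloud infrastructure) and the names `CostItem` and `estimate_range` are illustrative assumptions; the ranges themselves come from the table, and entries marked "+" are flagged as open-ended rather than capped.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    name: str
    low: int                  # lower bound of the range, USD
    high: int                 # upper bound of the range, USD
    open_ended: bool = False  # True where the table shows "+" (can exceed high)
    per_year: bool = False    # True for recurring costs such as cloud spend


def estimate_range(items, years=1):
    """Sum low and high bounds; recurring items are multiplied by years.

    Returns (total_low, total_high, open_ended). If any item is open-ended,
    total_high is a typical ceiling rather than a hard cap.
    """
    total_low = total_high = 0
    open_ended = False
    for item in items:
        factor = years if item.per_year else 1
        total_low += item.low * factor
        total_high += item.high * factor
        open_ended = open_ended or item.open_ended
    return total_low, total_high, open_ended


# Hypothetical project: adapt a pre-trained model and run it in the cloud for two years.
project = [
    CostItem("Fine-tuning pre-trained models", 10_000, 100_000),
    CostItem("Data labeling & annotation", 10_000, 250_000, open_ended=True),
    CostItem("Infrastructure (cloud)", 5_000, 100_000, per_year=True),
]

low, high, open_ended = estimate_range(project, years=2)
print(f"${low:,} - ${high:,}{'+' if open_ended else ''}")  # $30,000 - $550,000+
```

The total deliberately leaves out talent, integration, and maintenance, which is where the hidden costs tend to appear.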

Ongoing and hidden costs

- Maintenance & retraining: Continuous updates are required to prevent “model drift.”

- Compliance & governance: Early investment here reduces legal risks, especially in finance and healthcare.

- Talent churn: High turnover of AI specialists inflates recruitment and retention costs.

- Integration errors: Scaling from pilot to production can cause cost overruns of 500–1000% if underestimated.
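Retraining budgets only pay off if teams can tell when drift is actually happening. One common approach is to compare the distribution of a model input in production against the distribution seen at training time. The sketch below uses the Population Stability Index (PSI), a standard drift statistic. The function name, the equal-width binning, the toy data, and the 0.2 alert threshold (a widely used rule of thumb, not a universal standard) are all assumptions for illustration.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i).

    e_i and a_i are the shares of the reference (training-time) and new
    (production) samples in each bin. Bin edges come from the reference
    range; out-of-range values are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clip to valid bins
            counts[idx] += 1
        eps = 1e-6  # floor for empty bins, avoids log(0) and division by zero
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]      # hypothetical training-time values
unchanged = list(reference)                    # production data, same distribution
shifted = [0.5 + i / 200 for i in range(100)]  # production data drifted upward

DRIFT_THRESHOLD = 0.2  # assumption: a common PSI rule of thumb
print(population_stability_index(reference, unchanged))                   # 0.0
print(population_stability_index(reference, shifted) > DRIFT_THRESHOLD)  # True
```

In practice a check like this would run on a schedule for each important input, with alerts feeding the retraining plan.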

Smarter budgeting strategies

- Start with pilots ($50,000–$150,000) before scaling enterprise-wide.

- Use open-source frameworks (e.g., PyTorch, Hugging Face) to cut licensing costs.

- Lean on cloud AI services for flexible scaling, instead of buying heavy hardware upfront.

- Plan for compliance and governance early, not after launch.

AI implementation in 2025 is costly but manageable with clear planning.

- Small pilots: $50K–$150K

- Custom models: $50K–$500K+

- Enterprise rollouts: $500K–several million

- Cutting-edge LLMs: $10M+

The main risk is underestimating. Companies that plan for hidden costs (data, integration, governance) are far more likely to scale AI without burning budgets.
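A quick calculation shows how steep the 500–1000% integration overruns mentioned above are. This sketch assumes an overrun of X% means actual spend equals budget × (1 + X/100); the $100,000 scaling estimate and the function name are hypothetical.

```python
def projected_cost(budget, overrun_pct):
    """Actual spend when a budget is exceeded by overrun_pct percent.

    Assumption: actual = budget * (1 + overrun_pct / 100), so a 500%
    overrun means six times the original budget.
    """
    return budget * (1 + overrun_pct / 100)


scaling_budget = 100_000  # hypothetical pilot-to-production estimate, USD
for pct in (500, 1000):
    print(f"{pct}% overrun: ${projected_cost(scaling_budget, pct):,.0f}")
# 500% overrun: $600,000
# 1000% overrun: $1,100,000
```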

AI Adoption in Enterprises (Trends, Challenges, and Success Factors)

What does AI adoption in enterprises look like in 2025?

AI adoption is nearly universal, but maturity is rare. According to McKinsey (Superagency in the Workplace, 2025), almost every company is investing in AI, yet just 1% call themselves "mature." Meanwhile, Gartner (2025) reports that 45% of high-maturity organizations keep AI projects operational for at least three years, compared to only 20% of low-maturity ones. The gap lies not in employee readiness (most workers already use AI) but in leadership alignment, governance, and long-term strategy.

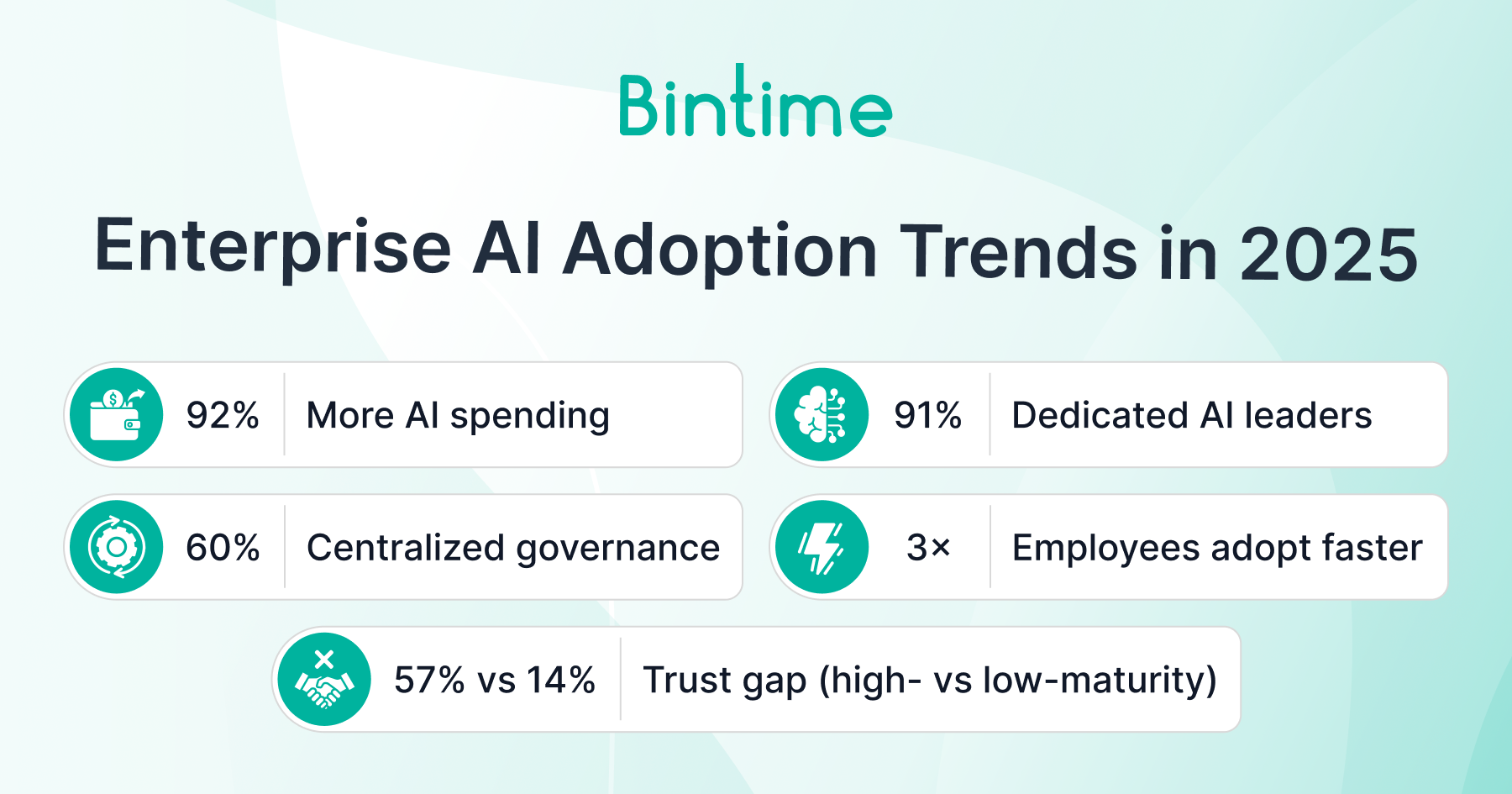

Enterprise AI Adoption Trends in 2025

- Near-universal investment: 92% of leaders plan to increase AI spending in the next three years (McKinsey, Superagency in the Workplace, 2025).

- Trust drives adoption: 57% of business units in high-maturity firms trust AI systems, vs. only 14% in low-maturity ones (Gartner, 2025).

- Centralized governance: Nearly 60% of high-maturity organizations have centralized AI strategy, governance, and infrastructure.

- Dedicated leadership: 91% of high-maturity companies now appoint AI leaders to oversee architecture, innovation, and metrics.

- Employee readiness: McKinsey found employees use AI tools at 3x the rate their leaders assume, signaling untapped bottom-up adoption potential.

Key Challenges Holding Enterprises Back

- Leadership misalignment: Without executive consensus on AI priorities, pilots stall.

- Data quality and availability: 29–34% of leaders cite it as the top barrier (Gartner, 2025).

- Security and compliance risks: Nearly half of high-maturity firms identify security threats as their biggest concern.

- Scaling costs: As noted in the cost breakdown above, moving from pilot to production can inflate costs by 500–1000%.

- Lack of metrics: Only 63% of mature organizations quantify AI success; the rest risk losing track of ROI.

Success Factors in Enterprise AI Adoption

- Choose projects by value + feasibility: High-maturity firms prioritize initiatives where AI impact is measurable and business-aligned.

- Invest in governance early: Firms that establish security, compliance, and data policies upfront avoid expensive rework later.

- Create dedicated leadership roles: AI leaders focus on infrastructure, teams, and innovation, ensuring alignment across departments.

- Measure impact with metrics: Mature organizations use ROI, customer impact, and risk analysis to sustain projects over years.

- Build employee trust: Adoption scales faster when employees understand and trust AI outputs. Transparency is non-negotiable.
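The ROI metric that mature organizations track reduces to simple arithmetic. Here is a minimal Python sketch of two numbers a metrics dashboard might report: return on investment and payback period. The function names and the pilot figures are hypothetical, and "net benefit" is assumed to already subtract running costs such as cloud spend and maintenance.

```python
import math


def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost, monthly_net_benefit):
    """Whole months until cumulative net benefit covers the upfront cost.

    Returns None if the project never pays back at this rate.
    """
    if monthly_net_benefit <= 0:
        return None
    return math.ceil(upfront_cost / monthly_net_benefit)


# Hypothetical pilot: $120K to build, $15K/month net benefit once live.
cost, monthly = 120_000, 15_000
print(f"12-month ROI: {roi(monthly * 12, cost):.0%}")      # 12-month ROI: 50%
print(f"Payback: {payback_months(cost, monthly)} months")  # Payback: 8 months
```

Tracking both matters: a project can show positive ROI over three years yet take too long to pay back for a team that must justify its budget every quarter.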

In 2025, AI adoption is everywhere, but maturity is rare. Success depends less on the technology itself and more on leadership, governance, and trust. Enterprises that align executive vision, appoint AI leaders, enforce data quality, and measure outcomes are the ones turning pilots into lasting impact.

Future Outlook — Where Neural Networks and Attention Mechanisms Are Headed

What’s next for neural networks and attention mechanisms?

By 2030, neural networks will evolve into more efficient, interpretable, and specialized architectures, with attention mechanisms expanding beyond language into vision, multimodal AI, and edge deployments. The trend isn’t bigger models at any cost; it’s smarter, leaner, and more aligned with real-world business needs.

Key directions in the near term (2025–2027)

- Multimodal AI as standard: Transformers with cross-attention will unify text, image, audio, and video into single models. Already, OpenAI’s Sora and Google’s Gemini point in this direction.

- Hybrid architectures: CNNs + attention modules are becoming common in vision tasks (healthcare imaging, industrial inspection). Expect more “mix-and-match” models instead of single dominant architectures.

- Efficiency first: Model compression (quantization, pruning) and small language models (SLMs) are gaining traction as companies shift from scaling at all costs to right-sizing for business tasks.

- Enterprise-ready governance: As Gartner (2025) highlights, dedicated AI leaders and governance frameworks are becoming the norm, not the exception. This makes future AI less of a “black box” and more of a controlled, measurable business tool.
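To make "model compression" more concrete, here is a minimal stdlib-only Python sketch of symmetric int8 quantization, one of the efficiency techniques named above. Each 32-bit float weight is replaced by an 8-bit integer plus one shared scale factor, cutting storage roughly fourfold at the cost of a small rounding error. The function names and toy weights are illustrative; real toolkits typically add refinements such as per-channel scales and calibration data.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization.

    Maps floats in [-max|w|, +max|w|] onto integers in [-127, 127] using a
    single scale factor; returns the int8 values and that scale.
    """
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the original float weights."""
    return [v * scale for v in q]


weights = array("f", [0.42, -1.27, 0.05, 0.9, -0.33, 1.1, -0.8, 0.0])  # float32
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(weights.itemsize * len(weights), "bytes as float32")  # 32 bytes as float32
print(q.itemsize * len(q), "bytes as int8")                 # 8 bytes as int8
print(max(abs(a - b) for a, b in zip(weights, restored)))   # rounding error, at most scale / 2
```

The same trade-off (smaller, faster models in exchange for slightly less precise weights) is what makes the edge deployments described below feasible.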

Mid-term (2027–2030)

- Edge deployment of attention models: Running transformer-like models on smaller devices, from medical scanners to supply-chain sensors, to process data locally and reduce latency.

- Automated design maturity: Neural Architecture Search (NAS) will be fully integrated into enterprise platforms, drastically reducing design cycles and tailoring architectures to specific domains (e.g., retail forecasting, drug discovery).

- Trust and interpretability: A growing body of research and regulation will force companies to adopt explainable architectures. Businesses won’t just ask “Does it work?” but “Can I prove why it worked?”

- Quantum influence: Early quantum processors will begin to shape neural network design, particularly for optimization-intensive tasks such as logistics or materials science.

What this means for business leaders

- Expect costs to normalize: Training frontier models may always cost millions, but enterprise-grade neural networks will become cheaper, thanks to model compression and cloud optimization.

- Focus shifts from pilots to portfolios: AI will no longer be a handful of experiments; it will be embedded into multiple functions simultaneously (finance, HR, supply chain, marketing).

- Adoption will depend on trust: Transparency, metrics, and governance will be as important as accuracy. Organizations that fail here risk falling behind even if their models are technically superior.

The future of neural networks and attention isn’t just about bigger models; it’s about better fits. Expect leaner architectures, multimodal AI, hybrid systems, and enterprise governance to dominate the rest of the decade. For businesses, the winners will be those who treat AI not as a pilot project but as an operational pillar, built on transparency and trust.

Conclusion & CTA

Neural networks don’t succeed on horsepower alone; they win on fit. The right architecture shapes how data flows, how signals combine, and how reliably a model learns. That’s why CNNs excel at images, transformers at language and multimodal tasks, and GNNs at relationships like supply chains. Get this match wrong and budgets evaporate in pilots. Get it right and models move from demo to durable results.

For retailers and brands, the stakes are especially clear. In AI for eCommerce, architecture choice defines whether recommendation engines feel relevant, search functions return what customers really want, and fraud checks happen fast enough to stop bad actors without blocking good buyers. The line between abandoned carts and increased revenue often runs straight through the model design.

Struggling with product discovery, forecasting demand, spotting fraud, or scaling customer support? Start small with a pilot that delivers results in weeks, not years. We’ll prove the ROI, fine-tune the architecture, and leave you with a clear path from prototype to production.

FAQ

What are the different types of neural networks?

The main types include Convolutional Neural Networks (CNNs) for images, Recurrent Neural Networks (RNNs) for sequences, Long Short-Term Memory (LSTM) networks for long dependencies, Transformers for attention-based tasks, and Generative Adversarial Networks (GANs) for data generation. Each serves different data types and business applications.

Why is neural network architecture important?

Architecture determines how data flows through the network and what patterns it can learn. A well-designed architecture balances accuracy, training cost, and interpretability, making it possible to scale AI solutions reliably in industries like healthcare, finance, and retail.

What is the attention mechanism in deep learning?

Attention is a method that lets models focus on the most relevant parts of the input rather than treating everything equally. This makes AI better at understanding language, matching text with images, and generating accurate predictions across complex data.
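For readers who want to see the mechanism itself, here is a minimal stdlib-only Python sketch of scaled dot-product attention, the form used in transformers: each query is scored against every key, the scores become weights through a softmax, and the output is the weighted average of the values. The toy two-dimensional vectors are illustrative; real models learn the query, key, and value projections and run many attention heads in parallel.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    queries: list of query vectors; keys and values: equal-length lists of vectors.
    Returns one output vector per query, plus the attention weights.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how strongly this query "attends" to each key
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
outputs, weights = attention([[3.0, 0.0]], keys, values)
print(weights[0])  # the first key, which matches the query, gets most of the weight
print(outputs[0])  # so the output is pulled toward the first value vector
```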

How much does it cost to implement AI in 2025?

Costs range widely: pilot projects can start at $50,000, while enterprise rollouts run into the millions. Large-scale training of frontier models like GPT-class systems can exceed $10 million. Businesses often underestimate costs by 30–40%, especially for data preparation and integration.

What are the main challenges of AI adoption in enterprises?

Common barriers include poor data quality, lack of leadership alignment, high scaling costs, and compliance risks. According to Gartner (2025), trust and governance are the biggest differentiators: high-maturity organizations sustain AI projects for 3+ years by focusing on metrics and dedicated leadership.

Where are neural networks headed in the future?

By 2030, AI models will be smaller, more efficient, and more interpretable. Expect hybrid CNN–transformer systems, multimodal AI (text, image, audio), and edge deployment to become standard. Governance and transparency will matter as much as accuracy for enterprise adoption.