Have you ever wondered how your phone recognizes your face, or how self-driving cars spot pedestrians and road signs? At the heart of these technologies are convolutional neural networks (CNNs), a type of artificial intelligence inspired by how our brains process images. However, just like humans sometimes miss details, CNNs also face challenges in focusing on what’s important in an image.

But don’t worry—there’s a way to teach these networks to “pay attention” better! Let’s explore how the fascinating “attention mechanism” works and why it’s revolutionizing image recognition and beyond.

What Are Convolutional Neural Networks (CNNs)?

Think of CNNs as high-tech image detectives. They excel at scanning pictures to identify patterns—like edges, textures, and shapes—and use these patterns to recognize objects, whether it’s a cat, a stop sign, or a face.

CNNs achieve this through two main operations:

- Convolutional Layers: These are like magnifying glasses, zooming in on small areas of an image to detect features.

- Pooling Layers: These summarize and compress details, making the network more efficient and better at handling small changes in the image.

However, CNNs aren’t flawless. They sometimes get sidetracked by irrelevant details or struggle to link distant features within an image. That’s where the attention mechanism becomes a game-changer.

The Convolution Operation

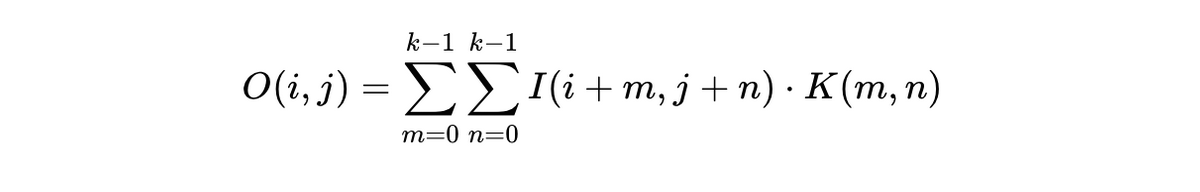

At its core, the convolutional layer performs a mathematical operation on the input image, which can be expressed as:

Here:

- I is the input image of a certain size.

- K is the convolution kernel (typically a 3×3 or 5×5 matrix), which acts like a filter scanning over the image.

- O(i, j) is the resulting feature map, representing patterns detected by the kernel.

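This operation captures local image features (like edges) and responds to a pattern in the same way wherever it appears, so small shifts don’t drastically affect recognition. Because the same small kernel is reused across the whole image, it also needs far fewer parameters than a fully connected layer applied to the same input.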
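To make the sliding-kernel idea concrete, here is a minimal sketch in plain Python. It assumes the common CNN form of the operation, O(i, j) = sum over m, n of I(i + m, j + n) · K(m, n), with no padding and a step of 1; the function name `conv2d` and the toy image and kernel are illustrative, not taken from any library.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Assumed form: O(i, j) = sum over m, n of I(i + m, j + n) * K(m, n).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for m in range(kh):
                for n in range(kw):
                    total += image[i + m][j + n] * kernel[m][n]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A 3x3 kernel that responds when brightness increases from left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[3, 3], [3, 3]]
```

Every output position sees the edge, so the whole feature map lights up. On a uniformly colored image the same kernel would return zeros, since its weights sum to zero.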

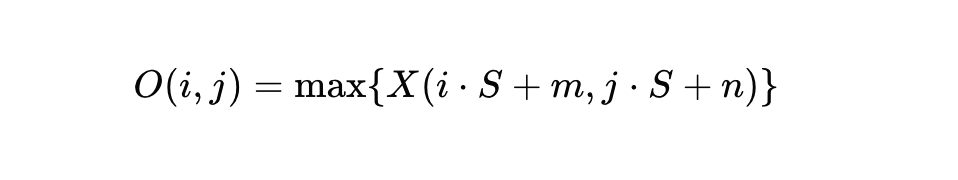

After convolution, a pooling layer is used. This is essentially an operation that generalizes or aggregates the output features obtained from the convolutional layer while reducing the feature space dimensions with a step S.

m, n are, respectively, the indices within the pooling window.

Pooling helps CNNs become more robust to minor variations, like small shifts in objects.
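As a rough illustration, the sketch below assumes max pooling (the article does not say which pooling variant is meant; average pooling would take the mean of each window instead). The names `max_pool2d`, `size`, and `stride` are illustrative, with `stride` playing the role of the step S.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window, moving by `stride`."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            # m and n index positions inside the pooling window.
            window = [
                feature_map[i + m][j + n]
                for m in range(size)
                for n in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


features = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 2],
    [2, 0, 3, 4],
]

pooled = max_pool2d(features)
print(pooled)  # [[4, 2], [2, 5]]
```

The 4×4 input shrinks to 2×2, and each output keeps only the strongest response in its window. That is why a feature that moves by a pixel inside a window often produces the same pooled value.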

Challenges of CNNs

Despite their incredible capabilities, CNNs face limitations:

- Limited focus on image details: They sometimes struggle to distinguish important features from irrelevant ones.

- Computational complexity: Processing high-dimensional data often requires significant resources.

- Insensitivity to distant connections: Features far apart in an image may be overlooked due to the localized nature of CNN filters.

These challenges make CNNs a good candidate for enhancement through attention mechanisms.
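To get a feel for the “distant connections” limitation, here is a small back-of-the-envelope sketch. It assumes a stack of stride-1 convolutions with no pooling or dilation, in which case each layer widens the region a neuron can “see” by (kernel size − 1) pixels. The function names and the 224-pixel image size are illustrative assumptions.

```python
def receptive_field(num_layers, kernel_size=3):
    """Width in pixels seen by one output value after stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def layers_to_cover(image_size, kernel_size=3):
    """Smallest number of stacked layers whose receptive field spans the image."""
    layers = 0
    while receptive_field(layers, kernel_size) < image_size:
        layers += 1
    return layers


print(receptive_field(1))    # 3
print(receptive_field(5))    # 11
print(layers_to_cover(224))  # 112
```

Real networks widen their view much faster by using pooling and strides, but the point stands: with purely local filters, relating two far-apart regions takes many layers.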

What Is the Attention Mechanism?

Imagine reading a book where some words are bolded to help you focus on the most important parts. The attention mechanism does something similar. It helps CNNs zero in on the critical features of an image while ignoring the irrelevant bits.

Originally developed for natural language processing (like translating languages), attention mechanisms are now making waves in image recognition. They guide CNNs to allocate more “brainpower” to significant areas of a picture, improving accuracy without demanding too much extra computing power.

How Does Attention Work?

Attention can work in a few ways:

- Channel Attention: This focuses on specific “channels” or layers of features, like textures or colors.

- Spatial Attention: This zooms in on certain areas of the image, like a pedestrian in a busy street scene.

- Combined Models: Advanced methods, like the Convolutional Block Attention Module (CBAM), blend both channel and spatial attention for even better results. Squeeze-and-Excitation Networks (SENet), by contrast, rely on channel attention alone.

Integrating attention into CNNs often involves adding special “attention layers” that act like highlighters, enhancing the most important features before sending them to the next stage of analysis.
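Here is a deliberately stripped-down sketch of the channel-attention idea: summarize each channel with one number, turn that number into a weight between 0 and 1, and scale the channel by it. It is an assumption-laden toy, not SENet itself, which passes the channel summaries through small learned layers before the sigmoid. The name `channel_attention` and the example values are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(features):
    """features: a list of C channels, each an H x W list of lists.

    Simplified: no learned layers, the gate is just sigmoid(channel mean).
    """
    weights = []
    for channel in features:
        values = [v for row in channel for v in row]
        weights.append(sigmoid(sum(values) / len(values)))
    reweighted = [
        [[v * w for v in row] for row in channel]
        for channel, w in zip(features, weights)
    ]
    return weights, reweighted


features = [
    [[2.0, 2.0], [2.0, 2.0]],      # a strongly active channel
    [[-2.0, -2.0], [-2.0, -2.0]],  # a weakly active channel
]

weights, reweighted = channel_attention(features)
print([round(w, 3) for w in weights])  # [0.881, 0.119]
```

The active channel keeps most of its signal while the other is damped. A spatial variant would instead compute one weight per pixel position and share it across channels.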

Integrating the Attention Mechanism into Classical CNNs

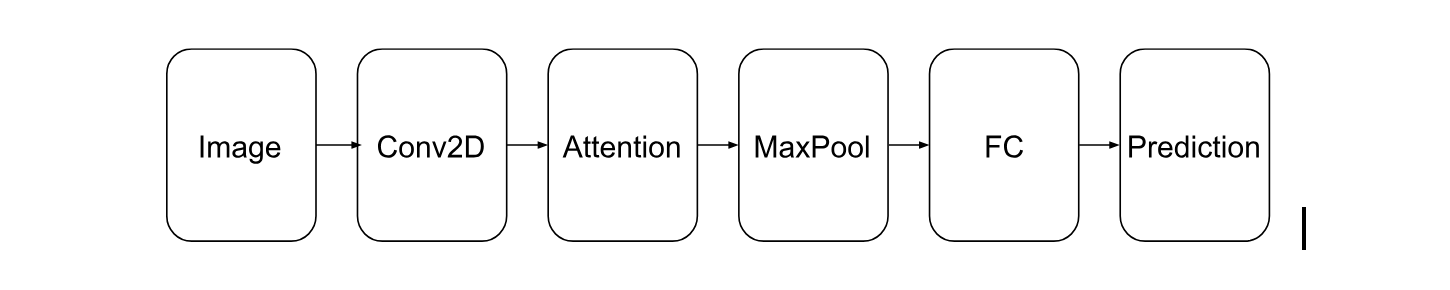

Attention mechanisms are often implemented into CNN architectures to dynamically focus on the most relevant features in the data. A simplified architecture of a CNN enhanced with attention mechanisms is shown below:

In this implementation:

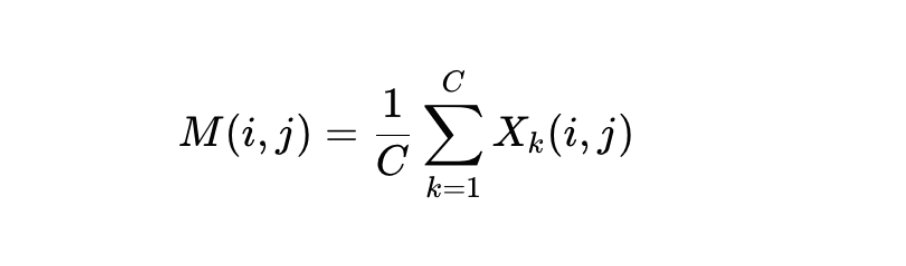

- The attention map is calculated using normalized global average pooling across channels:

Where:

- C is the number of channels.

- X is the feature tensor.

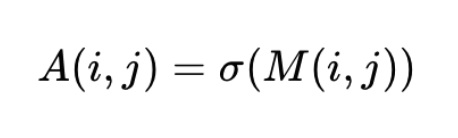

- Normalization of the obtained attention map is performed using the sigmoid function:

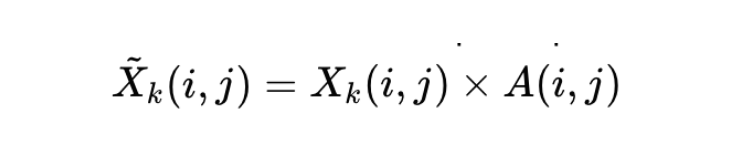

- Feature reweighting is done to enhance or suppress features from the convolutional layer:

These reweighted features are then passed to the fully connected (FC) layer for final predictions.
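The three steps can be sketched in a few lines of plain Python. Since the original formulas are only available as images, the exact forms here are assumptions based on the descriptions: the attention map is the mean over the C channels at each position, the sigmoid squashes it into (0, 1), and every channel is multiplied by the resulting map. The names `attention_map` and `apply_attention` are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def attention_map(features):
    """Assumed form: A(i, j) = (1 / C) * sum over c of X[c][i][j]."""
    num_channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    return [
        [
            sum(features[c][i][j] for c in range(num_channels)) / num_channels
            for j in range(width)
        ]
        for i in range(height)
    ]


def apply_attention(features):
    """Normalize the map with a sigmoid, then reweight every channel by it."""
    raw = attention_map(features)
    weights = [[sigmoid(a) for a in row] for row in raw]
    reweighted = [
        [
            [channel[i][j] * weights[i][j] for j in range(len(weights[0]))]
            for i in range(len(weights))
        ]
        for channel in features
    ]
    return weights, reweighted


# X: a feature tensor with C = 2 channels of size 2 x 2.
features = [
    [[4.0, -4.0], [0.0, 0.0]],
    [[2.0, -2.0], [0.0, 0.0]],
]

weights, reweighted = apply_attention(features)
print([[round(w, 3) for w in row] for row in weights])
# [[0.953, 0.047], [0.5, 0.5]]
```

Positions where the channels agree on a strong response keep almost all of their signal, while positions with negative responses are pushed toward zero. In a trained network the map would typically come from learned layers rather than a plain average.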

Why Is Attention Important?

Attention mechanisms are game changers because they:

- Improve Accuracy: They help CNNs make better decisions, whether spotting tumors in medical scans or identifying hazards for autonomous vehicles.

- Reduce Complexity: By focusing on what matters, they can let a smaller network match the accuracy of a larger one, even though the attention layers themselves add some computation.

- Boost Adaptability: They make CNNs more flexible, capable of handling different tasks with ease.

For example, attention-based models like Vision Transformers (ViT) are so effective that they ditch convolutional layers almost entirely, building their representations with self-attention over image patches instead.

Real-World Impact and Future Potential

Attention mechanisms are already transforming critical fields like autonomous transportation and healthcare. Imagine a self-driving car that spots a jaywalker in time, or a diagnostic tool that detects diseases faster and more accurately.

However, there’s still room to grow. Researchers are exploring how to balance the benefits of attention mechanisms with their added computational demands. After all, every extra layer in a model requires more resources, so the goal is to find the sweet spot.

In a Nutshell

By teaching neural networks to “pay attention,” we’re not just improving their vision—we’re making them smarter and more efficient. From self-driving cars to cutting-edge medical tools, the potential applications are endless. And as attention mechanisms continue to evolve, we can expect even more breakthroughs in how machines see and understand the world.

Reference List

[1] S. Woo, J. Park, J. Y. Lee, and I. S. Kweon, “CBAM: Convolutional block attention module,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2018. doi: 10.1007/978-3-030-01234-2_1.

[2] J. Hu, L. Shen, S. Albanie, G. Sun, and E. Wu, “Squeeze-and-Excitation Networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 8, 2020. doi: 10.1109/TPAMI.2019.2913372.

[3] S. Jetley, N. A. Lord, N. Lee, and P. H. S. Torr, “Learn to pay attention,” in 6th International Conference on Learning Representations, ICLR 2018 – Conference Track Proceedings, 2018.

[4] R. Draelos, “Learn to pay attention! Trainable visual attention in CNNs,” Medium. Accessed: Oct. 29, 2024. [Online]. Available: https://medium.com/p/87e2869f89f1

[5] L. Weng, “Attention? Attention!” Lil’Log. Accessed: Oct. 29, 2024. [Online]. Available: https://lilianweng.github.io/posts/2018-06-24-attention/