Neural networks, algorithms loosely modeled on the neural connections in biological brains, have become pivotal in solving a wide range of machine learning tasks. In this article, we examine the types, internal structures, and operational characteristics of neural networks, with examples that illustrate their application and efficacy.

Machine Learning Problem Formulation

In machine learning, the primary goal is to find an algorithm that can learn from data without explicit programming and make accurate predictions on unseen data. Formally, this involves searching for a function f in a hypothesis space H (f∈H) that minimizes the expected error E.

$$\min_{f \in H} E = \min_{f \in H} \sum_{(x,y) \sim D} L(f(x), y)$$

Where:

- X is the space of input data.

- Y is the space of output data.

- E is the expected value of the loss function L over samples (x, y) drawn from the probability distribution D on X×Y.

As training progresses and the network sees more data, the loss should decrease, indicating improved performance of the neural network.
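In practice the distribution D is unknown, so the expected error is usually estimated by averaging the loss over a finite sample. The following is a minimal sketch of that idea, not a definitive implementation: the squared-error loss, the toy data, and the four-element hypothesis space of lines through the origin are all assumptions chosen for illustration.

```python
from typing import Callable, Sequence, Tuple

Sample = Tuple[float, float]  # one (x, y) pair drawn from D


def squared_loss(prediction: float, target: float) -> float:
    """L(f(x), y): squared error, an assumed choice of loss."""
    return (prediction - target) ** 2


def empirical_risk(
    f: Callable[[float], float],
    samples: Sequence[Sample],
    loss: Callable[[float, float], float] = squared_loss,
) -> float:
    """Average loss of f over a finite sample, used as an estimate of E."""
    return sum(loss(f(x), y) for x, y in samples) / len(samples)


# Hypothetical toy data generated by y = 2x.
data = [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

# A tiny hypothesis space H: lines through the origin with different slopes.
hypotheses = {slope: (lambda x, s=slope: s * x) for slope in (0.5, 1.0, 2.0, 3.0)}

# Evaluate every f in H and keep the one with the lowest estimated error.
risks = {slope: empirical_risk(f, data) for slope, f in hypotheses.items()}
best_slope = min(risks, key=risks.get)
print(risks)
print("best slope:", best_slope)
```

Real training replaces the exhaustive search over a handful of candidates with an optimization procedure over a very large hypothesis space, but the quantity being minimized has the same form.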

Applications of Neural Networks

Neural networks can be applied in a wide variety of fields.

Modern Applications Examples

Neural networks are currently used in critical applications such as:

- Autonomous transportation. Tesla’s Autopilot system utilizes deep neural networks to analyze and interpret data from cameras and sensors around the vehicle. This supports driver-assistance features such as highway driving, navigating through traffic, and automated parking.

- Medical diagnostics and intervention. Aidoc uses neural networks to enhance radiology workflows by providing AI-powered solutions that analyze medical images for critical conditions. The system assists radiologists by quickly identifying abnormalities in CT scans, such as brain hemorrhages or pulmonary embolisms, leading to faster and more accurate diagnoses.

- Personalized education. DreamBox is an adaptive learning platform that uses neural networks to provide personalized math instruction. The system adjusts the difficulty and type of problems based on the student’s performance, offering a tailored educational experience.

- Fraud detection. PayPal employs neural networks to monitor transactions for fraudulent activity. The system analyzes transaction patterns and identifies anomalies that could indicate fraudulent behavior, helping to protect users from financial fraud.

- Agriculture. John Deere uses neural networks in its precision agriculture technology to optimize planting, fertilizing, and harvesting. The system analyzes data from sensors and satellite images to make recommendations that increase crop yields and reduce resource use.

- Robotics. Boston Dynamics’ robots, such as Spot, utilize neural networks for navigation and task execution. Spot can traverse rough terrain, avoid obstacles, and perform complex tasks like opening doors, all thanks to advanced neural network algorithms.

- Language interaction. Google Assistant uses recurrent neural networks (RNNs) and transformers to understand and respond to natural language queries. This allows users to interact with their devices using conversational language, making technology more accessible and intuitive.

- Generative tasks. OpenAI’s GPT-4, a transformer-based language model, generates human-like text based on a given prompt. It can write essays, create poetry, and even generate code, showing the capabilities of neural networks in creative and generative tasks.

Virtually every domain of human activity has potential applications for neural networks, which demonstrates their versatility and importance.

Types of Neural Network Architectures

Neural networks come in various architectures, most of which mimic the structure of neurons in the human brain, though some deviate from direct biological models.

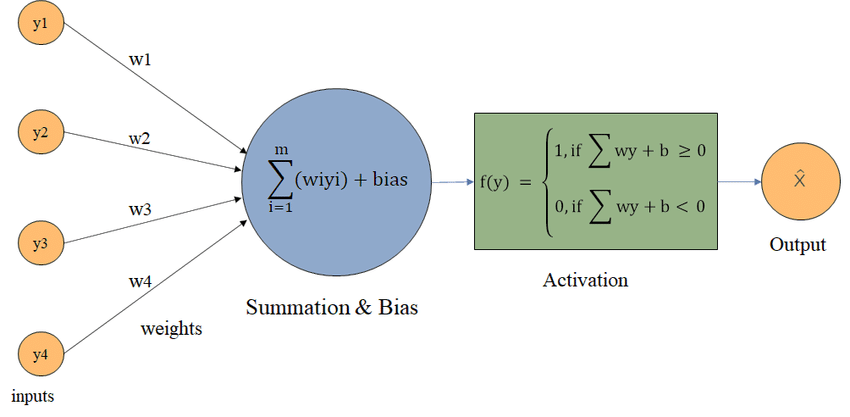

1. Perceptron

Source: Research Gate

Introduced by Frank Rosenblatt in 1958, the perceptron calculates f(x)=w⋅x+b, with the activation function often being the Heaviside step function. It is used for linearly separable tasks and simulates the functioning of a brain neuron, serving as the foundational structure of modern networks.

Perceptron Learning

For each example in the training set, the perceptron’s prediction is calculated. Then, for each weight index i, we compute:

Δw_i = η(t − o)x_i, where t and o are the target and obtained outputs, respectively, and x_i is the i-th input.

w_i = w_i + Δw_i

Here, η is the learning rate. This process is repeated until an acceptable prediction error is achieved, which can be viewed as a form of gradient descent.
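The update rule above can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions: the function names, the logical AND dataset, the value η = 0.5, and the treatment of the bias as a weight on a constant input of 1 are not from the original text.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (inputs x, target t)


def heaviside(z: float) -> int:
    """Step activation: 1 if z >= 0, otherwise 0."""
    return 1 if z >= 0 else 0


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Perceptron output o = step(w . x + b)."""
    return heaviside(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(
    data: Sequence[Example], eta: float = 0.5, max_epochs: int = 100
) -> Tuple[List[float], float]:
    """Apply w_i <- w_i + eta * (t - o) * x_i until an epoch has no errors."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in data:
            o = predict(weights, bias, x)
            if o != t:
                errors += 1
            for i, xi in enumerate(x):
                weights[i] += eta * (t - o) * xi
            # Assumption: the bias is updated like a weight on a constant input 1.
            bias += eta * (t - o)
        if errors == 0:
            break
    return weights, bias


# Logical AND is linearly separable, so the rule converges on it.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
predictions = [predict(weights, bias, x) for x, _ in and_data]
print(weights, bias, predictions)
```

When the prediction is already correct, t − o is zero and the weights are left unchanged, so only misclassified examples move the decision boundary.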

2. Multilayer Perceptron (MLP)

Source: Research Gate


Each node in an MLP is a perceptron, and each layer receives its input signals from the previous layer. Because MLPs typically use sigmoid or other differentiable activation functions, they can represent non-linear functions and offer greater capacity than a single perceptron.
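To illustrate how stacked layers handle a non-linear function, here is a minimal forward-pass sketch of a two-layer MLP that computes XOR, a function a single perceptron cannot represent. The weights are hand-picked for illustration rather than learned, and all names are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Differentiable activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(
    inputs: Sequence[float],
    weights: Sequence[Sequence[float]],
    biases: Sequence[float],
) -> List[float]:
    """One fully connected layer: each node computes sigmoid(w . x + b)."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not learned) weights, assumed for illustration only.
HIDDEN_W = [[20.0, 20.0], [-20.0, -20.0]]  # node 1 ~ OR, node 2 ~ NAND
HIDDEN_B = [-10.0, 30.0]
OUTPUT_W = [[20.0, 20.0]]                  # output node ~ AND of the two
OUTPUT_B = [-30.0]


def mlp_forward(x: Sequence[float]) -> float:
    """The hidden layer feeds the output layer, as described above."""
    hidden = dense(x, HIDDEN_W, HIDDEN_B)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(mlp_forward(x)))
```

In a trained network these weights would be found by backpropagation, which relies on the activation function being differentiable.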

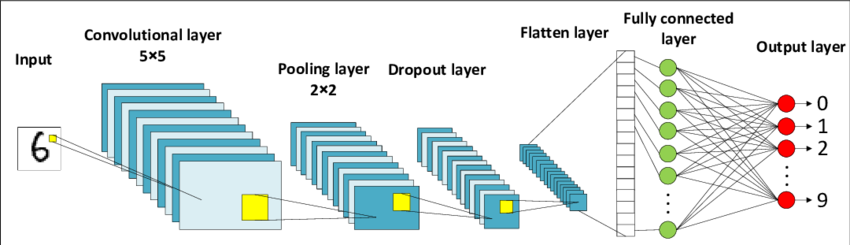

3. Convolutional Neural Networks (CNNs)

Source: Research Gate

Inspired by the biological models of the brain’s visual cortex, CNNs use two primary operations:

- Convolution of input data using a filter matrix;

- Pooling, which reduces dimensionality by taking the maximum or the average of neighboring values.

The extracted feature set is then transformed into a one-dimensional vector (flattening). CNNs are commonly used for image classification, facial recognition, and object detection in images.
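The three steps above (convolution, pooling, flattening) can be sketched without any library. This is a simplified illustration that assumes a single-channel image, no padding, a stride of 1 for the convolution, and max pooling; the edge-detecting filter and the toy image are made up for the example.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def convolve2d(image: Sequence[Sequence[float]], kernel: Sequence[Sequence[float]]) -> Matrix:
    """Slide the filter over the image (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped,
    so this is technically a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2d(feature_map: Sequence[Sequence[float]], size: int = 2) -> Matrix:
    """Keep the maximum of each non-overlapping size x size block."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


def flatten(feature_map: Sequence[Sequence[float]]) -> List[float]:
    """Turn the 2-D feature map into a one-dimensional vector."""
    return [value for row in feature_map for value in row]


# Toy 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 2x2 filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

features = convolve2d(image, kernel)  # 4x4 feature map
pooled = max_pool2d(features)         # 2x2 after pooling
vector = flatten(pooled)
print(vector)  # [2, 0, 2, 0]
```

The non-zero entries of the flattened vector mark the region where the filter found the edge; in a CNN this vector would then be passed to fully connected layers for classification.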

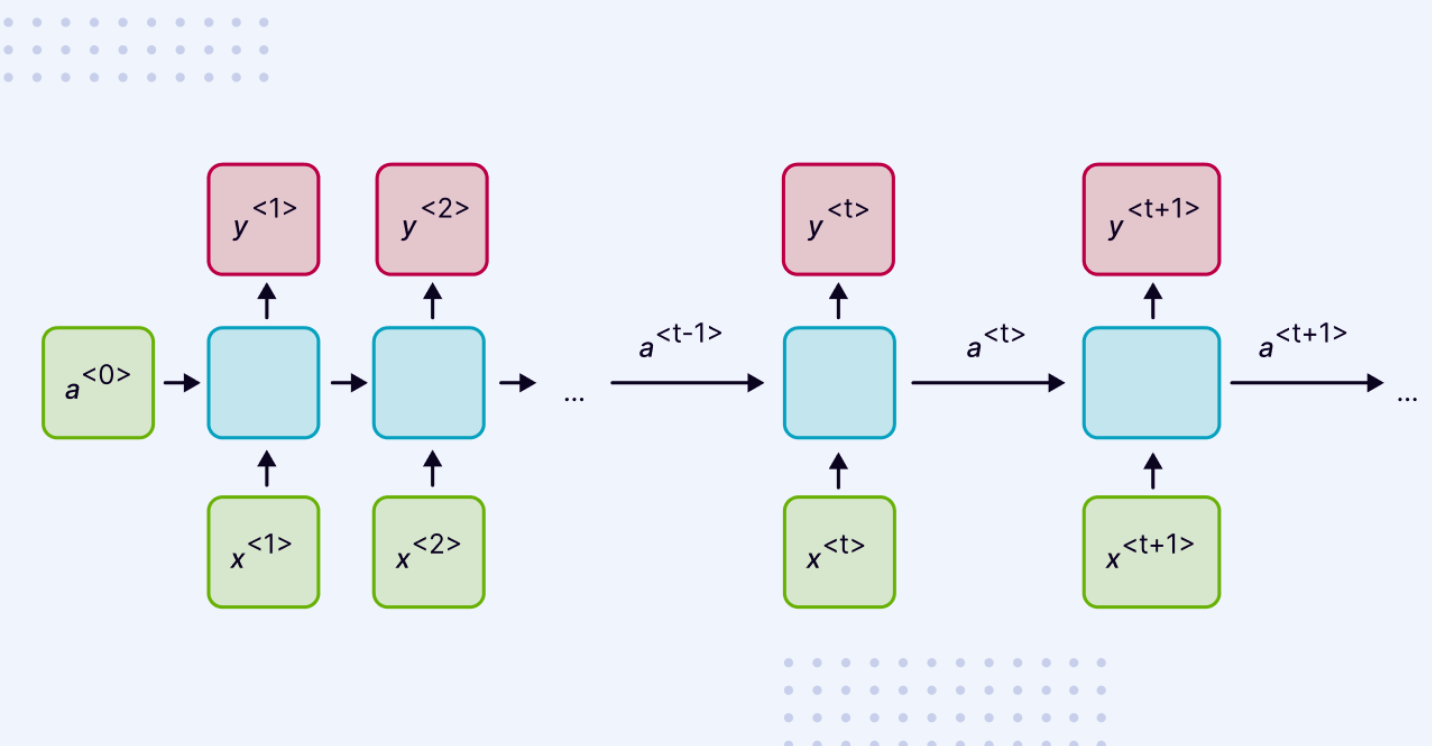

4. Recurrent Neural Networks (RNNs)

Source: DocSumo

RNNs combine the current input with the state of their hidden layers computed at the previous step, which lets them carry information along a sequence. They are applicable to:

- Sentiment analysis;

- Music generation;

- Voice recognition and other time series data;

- Machine translation;

- Text analysis and named entity recognition;

- Sequential computation.
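The recurrence behind RNNs, in which the state computed at the previous step is combined with the current input, can be sketched as follows. This is a minimal Elman-style cell written under assumptions: the tanh activation, the hidden size of 2, and the weight values are illustrative and not taken from the original text.

```python
import math
from typing import List, Sequence

Vector = List[float]


def rnn_step(
    x_t: Sequence[float],
    h_prev: Sequence[float],
    w_x: Sequence[Sequence[float]],
    w_h: Sequence[Sequence[float]],
    b: Sequence[float],
) -> Vector:
    """h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(w * x for w, x in zip(w_x[k], x_t))
            + sum(w * h for w, h in zip(w_h[k], h_prev))
            + b[k]
        )
        for k in range(len(b))
    ]


def run_rnn(sequence, w_x, w_h, b) -> List[Vector]:
    """Process a sequence step by step, reusing the previous hidden state."""
    h = [0.0] * len(b)
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states


# Hypothetical weights: 1 input feature, 2 hidden units.
W_X = [[1.0], [0.5]]
W_H = [[0.5, 0.0], [0.0, 0.5]]
B = [0.0, 0.0]

# The same inputs in a different order give a different final state,
# because the hidden state carries information from earlier steps.
final_a = run_rnn([[1.0], [0.0]], W_X, W_H, B)[-1]
final_b = run_rnn([[0.0], [1.0]], W_X, W_H, B)[-1]
print(final_a, final_b)
```

This sensitivity to order is what makes the architecture suitable for the sequence tasks listed above.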

5. Transformers

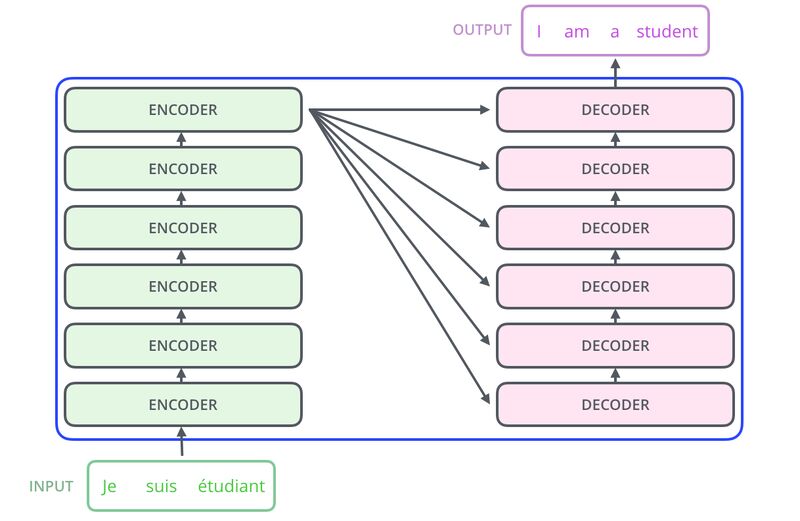

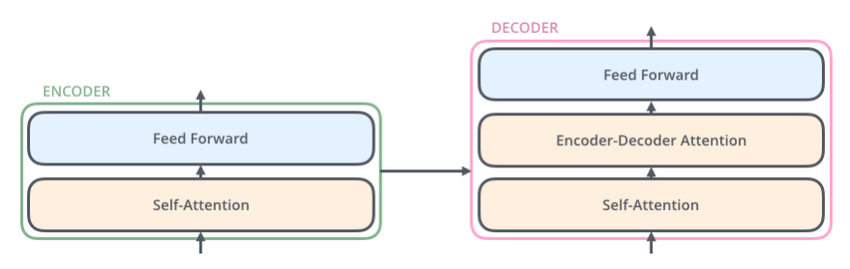

Source: GitHub

Transformers consist of stacks of encoders and decoders, utilizing attention and self-attention mechanisms in each encoder and decoder.

Source: Research Gate
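The self-attention mechanism mentioned above can be sketched as scaled dot-product attention. This is a simplified illustration: it assumes the queries, keys, and values are the token vectors themselves, whereas a real transformer first applies learned projection matrices and uses several attention heads. All names and the toy token vectors are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax: weights are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention(
    queries: Sequence[Sequence[float]],
    keys: Sequence[Sequence[float]],
    values: Sequence[Sequence[float]],
) -> Tuple[List[Vector], List[Vector]]:
    """For each query: softmax(q . k / sqrt(d_k)) used to average the values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token embeddings (assumed values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: every token attends to every token of the same sequence.
outputs, attn_weights = attention(tokens, tokens, tokens)
for row in attn_weights:
    print([round(w, 3) for w in row])
```

Each output vector is a weighted mix of all token vectors, with larger weights given to tokens whose keys are most similar to the query.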

Many modern AI services, including large language models, are built on transformers. Transformers are widely used in:

- Machine translation

- Language processing

- Time series prediction

- Text generation

- Biological sequence analysis

- Video recognition/understanding

- Text summarization

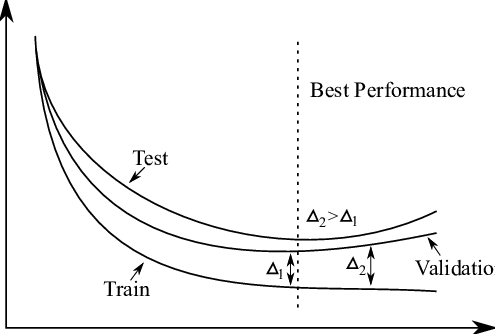

Challenges in Neural Networks

Neural networks face several challenges, including:

- Generalization: Ability to perform well on data outside the training set

- Underfitting: Inability to achieve low error during training

- Overfitting: A large gap between training error and test error

- Capacity: The model’s ability to fit a wide variety of functions, which must be tuned so that the model neither underfits nor overfits

Source: Research Gate
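The relationship between capacity, underfitting, and overfitting can be illustrated with a deliberately simple stand-in model. The sketch below uses k-nearest-neighbor regression instead of a neural network, because its capacity is controlled by a single number k; the dataset, the function names, and the choice of model are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y)

# Hypothetical noisy samples of y = x for training, clean samples for testing.
TRAIN: List[Point] = [(0.0, 0.3), (1.0, 0.8), (2.0, 2.4), (3.0, 2.7), (4.0, 4.3), (5.0, 4.8)]
TEST: List[Point] = [(0.5, 0.5), (1.5, 1.5), (2.5, 2.5), (3.5, 3.5), (4.5, 4.5)]


def knn_predict(x: float, train: Sequence[Point], k: int) -> float:
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(data: Sequence[Point], train: Sequence[Point], k: int) -> float:
    """Mean squared error of the k-NN predictor on a dataset."""
    return sum((knn_predict(x, train, k) - y) ** 2 for x, y in data) / len(data)


errors = {}
for k in (1, len(TRAIN)):
    errors[k] = (mse(TRAIN, TRAIN, k), mse(TEST, TRAIN, k))
    print(f"k={k}: train error={errors[k][0]:.3f}, test error={errors[k][1]:.3f}")
```

With k = 1 the model memorizes the training set, so the training error is zero while the test error is not, which is the gap associated with overfitting. With k equal to the size of the training set the model always predicts the mean target, so both errors stay high, which is underfitting.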

Real-Life Examples of Neural Network Models

OmniVec

Source: Semantic Scholar

OmniVec is a multimodal model that learns a shared vector space across different modalities, with training incorporating all available modalities.

Mistral 7B by Mistral

Source: Medium

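Mistral 7B is an open-source model that is reported to outperform larger well-known models such as Llama 2 13B on many benchmarks. It uses sliding window attention, in which each token attends only to a fixed-size window of preceding tokens, significantly reducing the cost of attention on long sequences.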
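Sliding window attention can be sketched as a mask in which each position attends only to itself and a fixed number of immediately preceding positions, rather than to the whole prefix. The example below is an illustrative sketch, not Mistral's implementation; the function names and the tiny sequence length and window size are assumptions.

```python
from typing import List


def causal_mask(seq_len: int) -> List[List[bool]]:
    """Standard causal attention: position i may attend to every j <= i."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """Position i may attend only to the last `window` positions up to i."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def attended_per_position(mask: List[List[bool]]) -> List[int]:
    """How many positions each row of the mask is allowed to attend to."""
    return [sum(row) for row in mask]


full = attended_per_position(causal_mask(6))
windowed = attended_per_position(sliding_window_mask(6, window=3))
print(full)      # [1, 2, 3, 4, 5, 6]
print(windowed)  # [1, 2, 3, 3, 3, 3]
```

With full causal attention the work per position grows with the sequence length, while with a sliding window it is capped at the window size. Information from older tokens can still reach later positions indirectly through stacked layers.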

Conclusion

The rapid growth of modern computational capabilities has spurred a renaissance of interest in neural networks, and expectations of artificial intelligence now in turn drive demand for computing power. After only a few years of rapid development, contemporary generative networks can handle many typical tasks and approach the proficiency of university students in various subjects. Consequently, the next revolution will likely be intellectual, bringing significant social and economic shifts.